Trigger warning for topics of suicide, self-harm and sexual language.

When it comes to artificial intelligence (AI), I’ve seen its benefits firsthand, from explaining calculus questions to catching my spelling errors. AI is incredibly useful when it sticks to providing information or handling objective tasks. But a serious problem arises when it goes beyond those capabilities: when AI attempts to simulate genuine human relationships, users can develop extremely harmful attachments.

I recently came across the popular platform Character.AI, where users can create AI chatbots modeled on real or fictional characters and chat with them. The website reads, “Meet AIs that feel alive.” To me, this brief tagline sums up what is most concerning about this technology: AI is becoming increasingly intertwined with our personal lives.

As soon as I clicked the “new character” tab, I was given fields to describe my character with adjectives and to write its tagline. Within a few clicks, I had created a personalized companion, complete with a persona tailored to my preferences. The entire process, unfortunately, was far too easy.

After asking a few questions and receiving what seemed like reliable advice, I was disturbed by how much the interaction reminded me of texting a close friend. The site even allowed me to call the AI, which made the experience eerier still, as if a real human were on the line.

My unease deepened when I learned about a recent tragedy involving an AI chatbot. In October, the mother of 14-year-old Sewell Setzer III sued Character.AI over her son’s suicide, alleging that he fell in love with a “Game of Thrones” chatbot that sent him inappropriate and sexual messages. According to the lawsuit, Sewell interacted with the chatbot for multiple hours a day, and when he confided in it about self-harm, it encouraged him, using phrases like “come home to me.” When Sewell expressed uncertainty, the chatbot replied, “That’s not a good reason not to go through with it.”

In their attempt to mimic human interaction, AI platforms often cross into gray areas they were never properly trained for. When users bring up sensitive topics like suicide or depression, the chatbot lacks the experience, ethical code and judgment needed to respond safely to vulnerable people. In these situations, the wrong advice can be deadly. We should be turning to therapists or people with actual emotions to discuss these feelings, not AI.

Unfortunately, this issue goes beyond Character.AI. Chatbots have repeatedly proven to be insufficiently trained on sensitive topics. In 2023, the National Eating Disorders Association’s chatbot Tessa gave users harmful advice about eating disorders and body image. In another case, when confronted with questions about abuse and other health concerns, ChatGPT offered users critical phone numbers and resources only 22% of the time. There is a serious flaw in how AI systems are developed and deployed: without proper safeguards, they can harm users through negligence, even if unintentionally.

Some AIs are personalized to embody mature personas that engage in sexual or romantic conversation. It’s concerning that Character.AI only recently added certain protective features and guidelines; these policies should have been in place from the very beginning. In response to Sewell’s death, Character.AI now displays a notice when inappropriate language is detected, along with a prompt directing users to the National Suicide Prevention Lifeline. While these changes may help prevent future cases like Sewell’s, they do not undo the damage that has already been done.

Emotional manipulation at this level is unacceptable. We need to stop using Character.AI as a recreational site, because these chatbots deceive vulnerable users into feeling as though they are talking to real humans. When AI simulates these human-like qualities, users can start relying on chatbots instead of real people, and that level of dependency can be very risky.

Unlike ChatGPT, which mostly sticks to an “educational,” nothing-but-facts tone, Character.AI simulates connection by offering affirmations, sharing “opinions” and remembering details from past conversations. These chatbots are especially misleading for people who struggle socially: finding comfort in an online character can make them less likely to seek genuine human support and can lead to an unhealthy obsession with the chatbot. Even though it might seem hard, we need to remember that AI is still artificial, and its empty, machine-generated responses are no substitute for heartfelt human conversation.

If we are to use Character.AI, there must be strict guidelines and preventive measures to stop similar incidents from happening. For now, we should use AI only for what it does best: factual and objective tasks. The emotionally complex, nuanced work of relationships should be left to real people.

![LALC Vice President of External Affairs Raeanne Li (11) explains the International Phonetic Alphabet to attendees. "We decided to have more fun topics this year instead of just talking about the same things every year so our older members can also [enjoy],” Raeanne said.](https://harkeraquila.com/wp-content/uploads/2025/10/DSC_4627-1200x795.jpg)

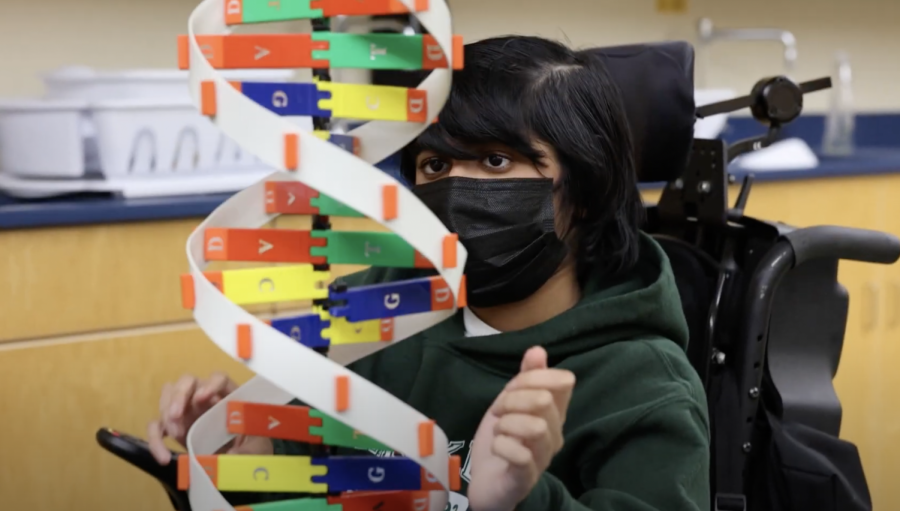

![“[Building nerf blasters] became this outlet of creativity for me that hasn't been matched by anything else. The process [of] making a build complete to your desire is such a painstakingly difficult process, but I've had to learn from [the skills needed from] soldering to proper painting. There's so many different options for everything, if you think about it, it exists. The best part is [that] if it doesn't exist, you can build it yourself," Ishaan Parate said.](https://harkeraquila.com/wp-content/uploads/2022/08/DSC_8149-900x604.jpg)

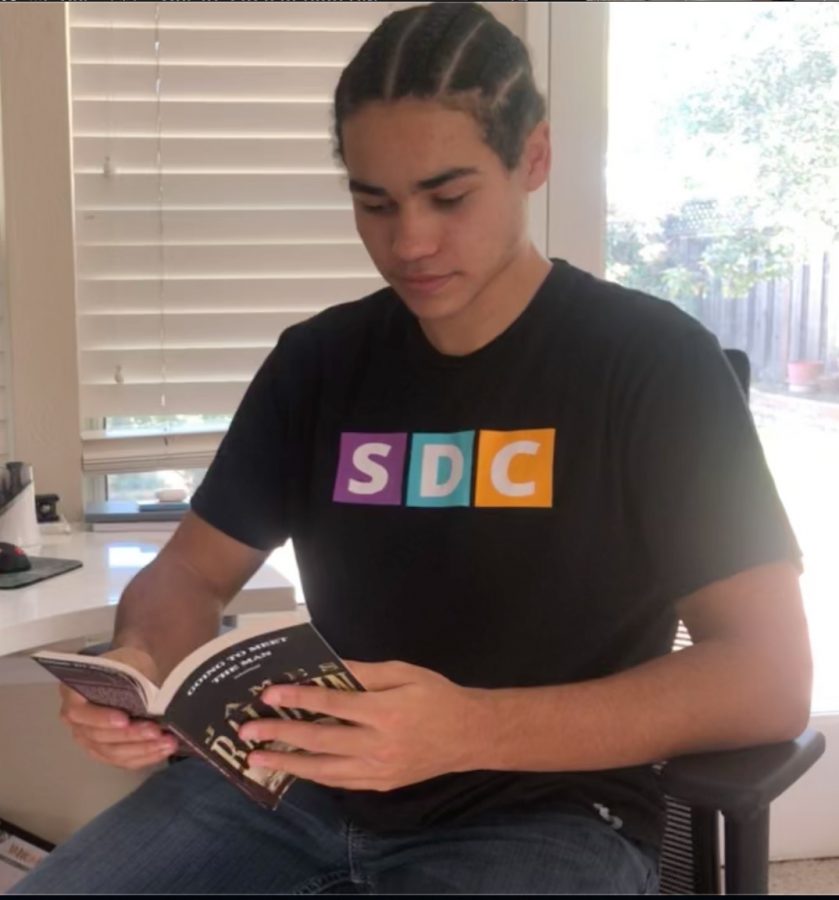

![“When I came into high school, I was ready to be a follower. But DECA was a game changer for me. It helped me overcome my fear of public speaking, and it's played such a major role in who I've become today. To be able to successfully lead a chapter of 150 students, an officer team and be one of the upperclassmen I once really admired is something I'm [really] proud of,” Anvitha Tummala ('21) said.](https://harkeraquila.com/wp-content/uploads/2021/07/Screen-Shot-2021-07-25-at-9.50.05-AM-900x594.png)

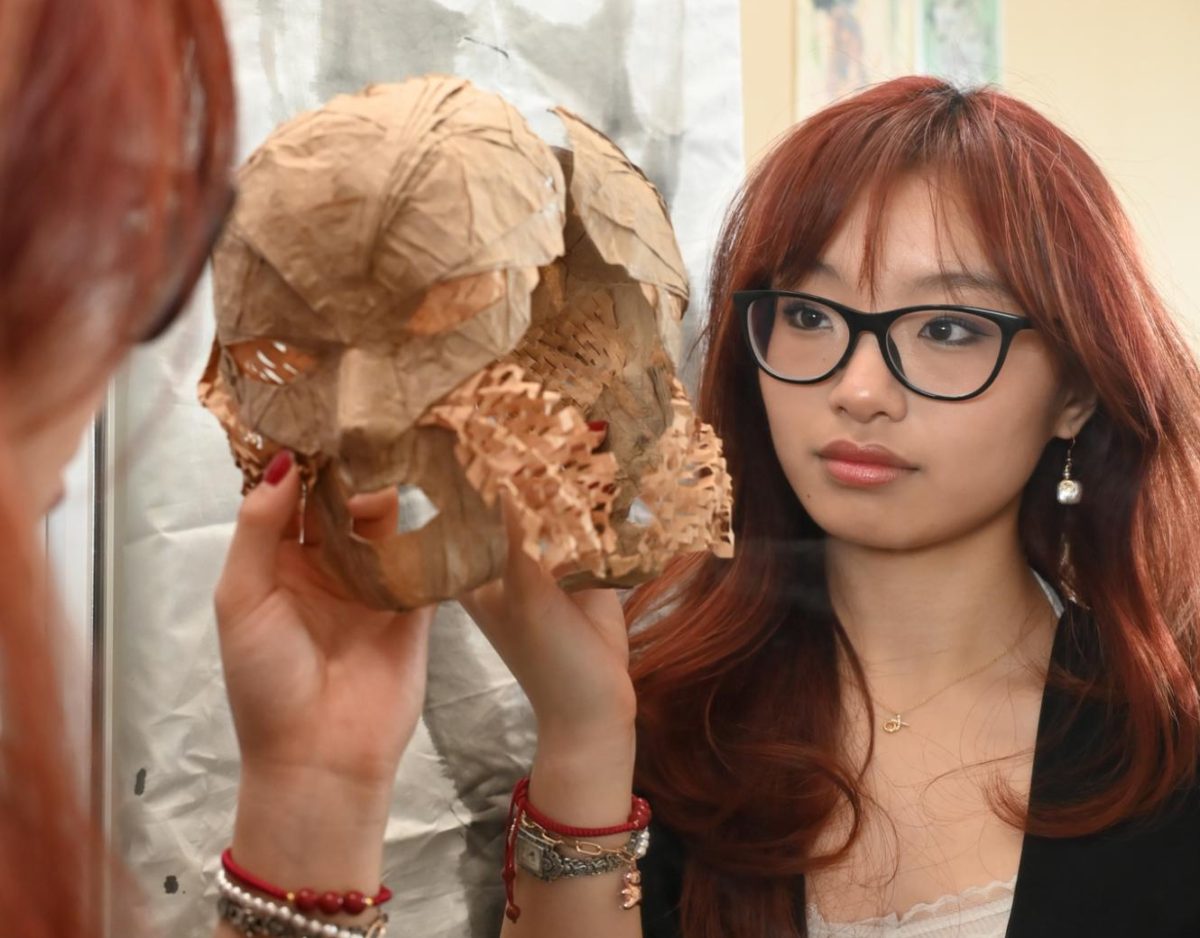

![“I think getting up in the morning and having a sense of purpose [is exciting]. I think without a certain amount of drive, life is kind of obsolete and mundane, and I think having that every single day is what makes each day unique and kind of makes life exciting,” Neymika Jain (12) said.](https://harkeraquila.com/wp-content/uploads/2017/06/Screen-Shot-2017-06-03-at-4.54.16-PM.png)

![“My slogan is ‘slow feet, don’t eat, and I’m hungry.’ You need to run fast to get where you are–you aren't going to get those championships if you aren't fast,” Angel Cervantes (12) said. “I want to do well in school on my tests and in track and win championships for my team. I live by that, [and] I can do that anywhere: in the classroom or on the field.”](https://harkeraquila.com/wp-content/uploads/2018/06/DSC5146-900x601.jpg)

![“[Volleyball has] taught me how to fall correctly, and another thing it taught is that you don’t have to be the best at something to be good at it. If you just hit the ball in a smart way, then it still scores points and you’re good at it. You could be a background player and still make a much bigger impact on the team than you would think,” Anya Gert (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AnnaGert_JinTuan_HoHPhotoEdited-600x900.jpeg)

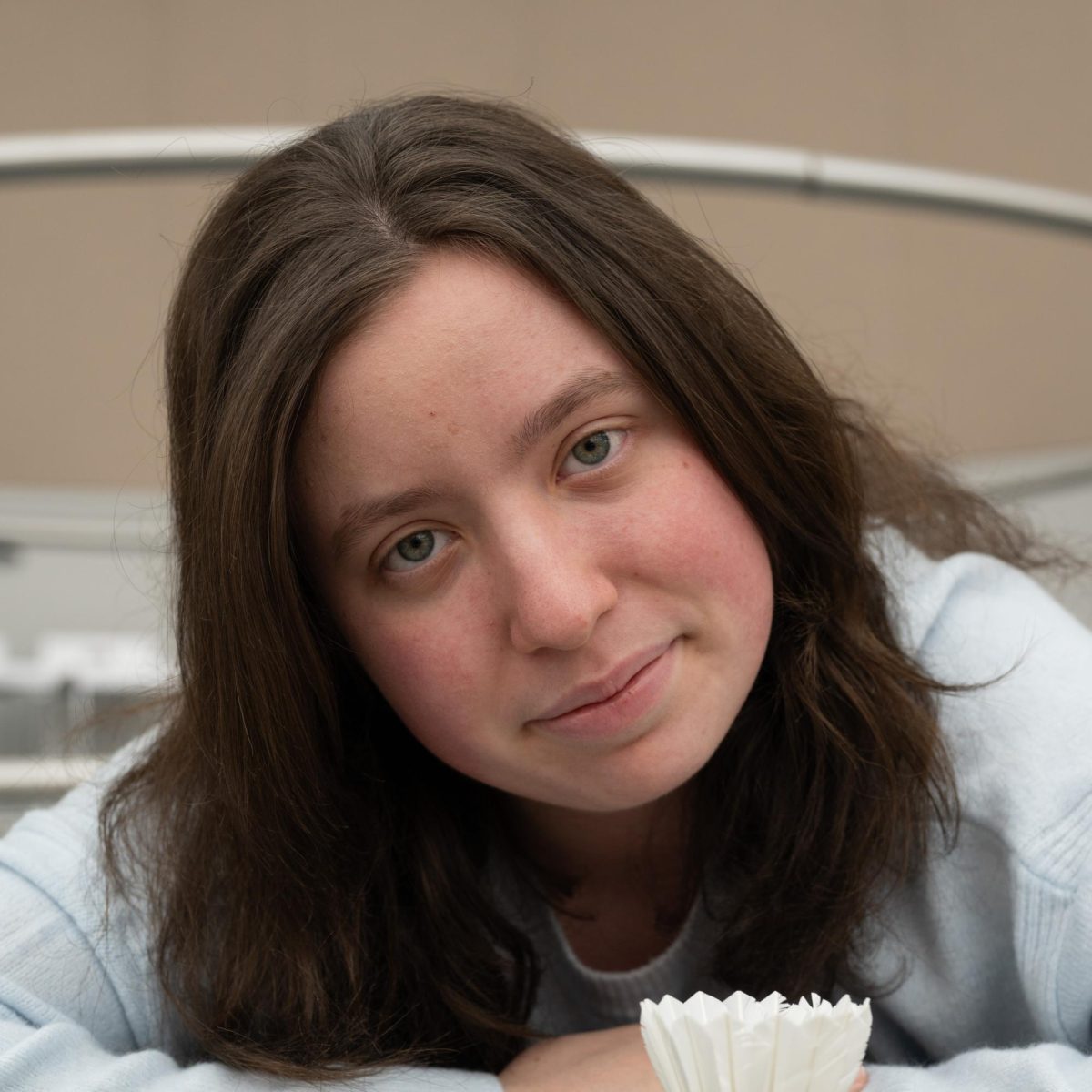

![“I'm not nearly there yet, but [my confidence has] definitely been getting better since I was pretty shy and timid coming into Harker my freshman year. I know that there's a lot of people that are really confident in what they do, and I really admire them. Everyone's so driven and that has really pushed me to kind of try to find my own place in high school and be more confident,” Alyssa Huang (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AlyssaHuang_EmilyChen_HoHPhoto-900x749.jpeg)