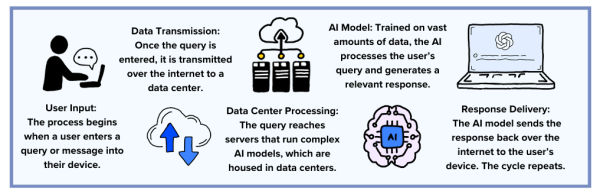

Your fingers flutter across the keyboard. Click. Enter. Bursts of AI-generated text appear, filling the screen in just a few seconds. The answer is instant, but behind this seamless interaction lies a hidden environmental cost.

From behind a screen, people often do not realize the real-world impact of every search. While ChatGPT appears to be just another search engine, many remain unaware of the significant environmental footprint left by large language models (LLMs).

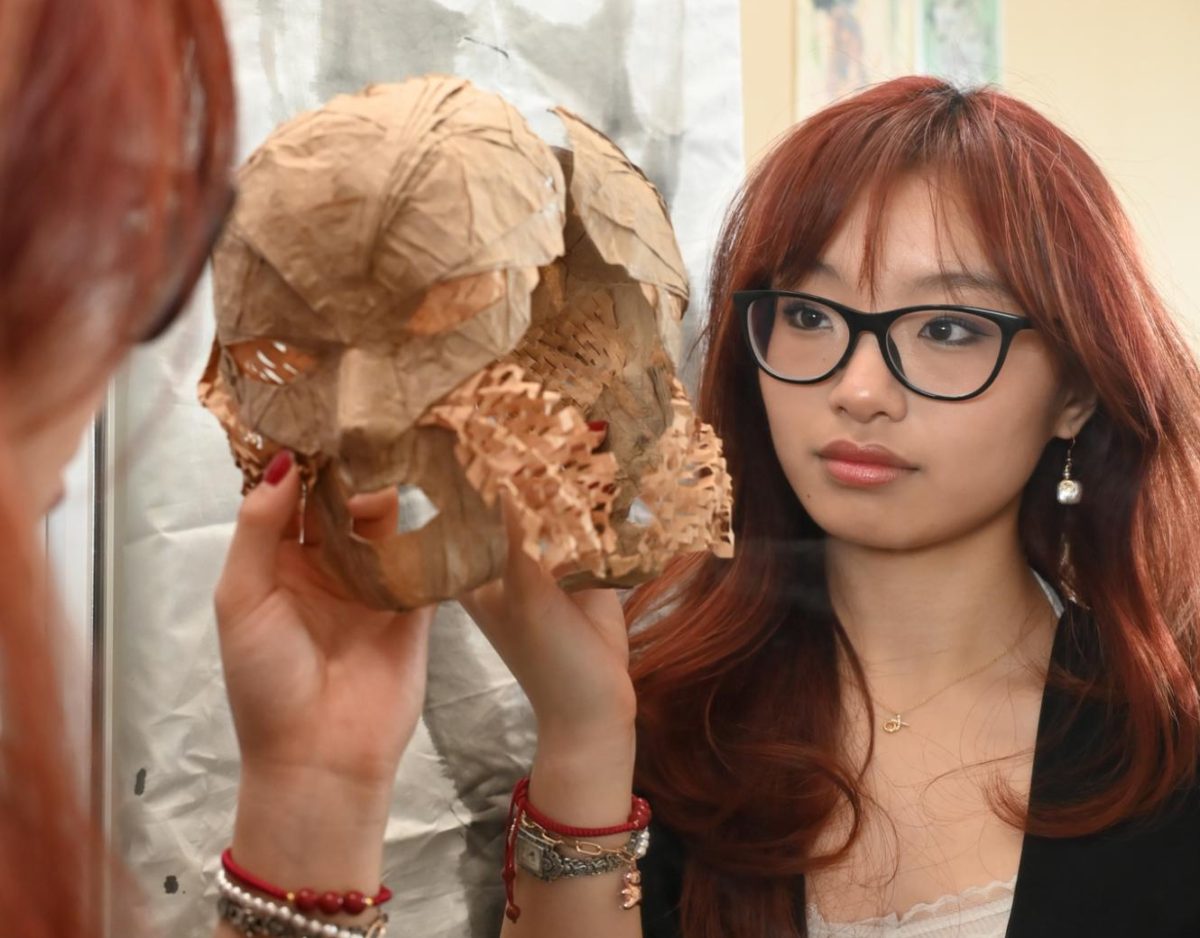

“In eighth grade, our history teacher told us that ChatGPT could be a good starting place for generating ideas, and that was my first exposure to it,” sophomore Abby Wang said. “We’re always talking about [how] ChatGPT can be used for cheating, but we haven’t really focused on the more immediate environmental consequences of using it.”

In data centers all across the world, these LLMs take in tens of millions of queries a day, and in turn consume enormous amounts of energy from the grid and millions of gallons of fresh water from already constrained reservoirs.

LLMs contain billions of parameters and train on hundreds of billions of words, so training requires far more energy than running the model on a single user’s input. Training GPT-3, the third generation of the OpenAI model family that underlies ChatGPT, consumed 1,287 megawatt-hours of electricity and emitted as much carbon dioxide as 123 gasoline-powered automobiles do in one year. Answering millions of queries uses more than half a million kilowatt-hours a day, roughly as much electricity as 17,000 average U.S. households use daily at about 29 kilowatt-hours each.

“If [the models] aren’t pulling from clean or renewable sources, then they might be pulling from fossil fuel sources,” Honors Systems Science teacher Chris Spenner said. “That has an impact regardless, and increasing our energy footprint is just kind of the wrong direction to go at a time when we’re looking at population growth everywhere, increased demand and more consumption.”

As LLMs demand ever more computational power, their environmental impact grows with them, in both carbon emissions and water usage. Large-scale use of these models is especially hard on water systems: a typical user interaction of 10 to 50 questions uses up about 500 mL of water.

“If there’s increased demand on hydropower, then we’re building more dams and that has an impact on the environment,” Spenner said. “When water is pulled from a source for cooling, the water doesn’t necessarily just disappear; the bulk flow of the water is redirected back into the environment with all kinds of contaminants. The water itself heats up, so that’s feeding back into our natural water systems and disrupting ecosystems.”

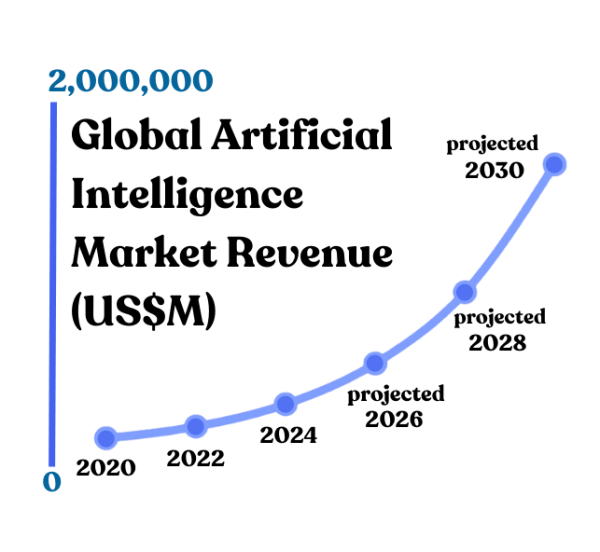

As more people turn to ChatGPT and other AI tools like Google’s Gemini and Anthropic’s Claude, growing usage heightens the threat of drought. The global AI market is projected to grow to over $1.5 trillion by 2030. Many of the data centers that power AI systems operate in areas facing water scarcity, like Arizona and California, which already suffer from long-term droughts.

Lauren Weston, Executive Director of Palo Alto-based environmental nonprofit Acterra, explains how increased water usage by AI in places with frequent dry spells could affect more than just water availability.

“We already know that the state of California and most states don’t efficiently house water during wet seasons,” Weston said. “So we don’t have a way of keeping water long term that doesn’t go away when it’s really hot. That is a huge concern that directly impacts the planet and the increasing wildfires that we’re all experiencing because our forests are drier, the ground is drier, so it’s producing drier natural habitats.”

To keep supercomputers cool, data centers require large amounts of the same fresh water that people drink, water that could otherwise support homes, parks and natural habitats. Water usage was an issue for tech companies long before AI use became widespread, and it is growing. According to company sustainability reports, Google’s water consumption jumped by 21% from 2021 to 2022, while Microsoft’s spiked by nearly 35%.

Acknowledging these concerns, large companies are trying to implement “water positive” policies, which aim to cut water usage and to conserve and restore more water than the companies consume by 2030. Google pledged to replenish 120% of the water it consumes, and Microsoft has invested in large replenishment projects around the world. However, sustaining these strategies over the long term is likely unfeasible without sacrificing the companies’ growth and efficiency.

The key to tackling the water scarcity crisis lies in raising public awareness of how much water AI actually consumes. Weston stresses that without a clear understanding of how much water is being used to sustain AI infrastructure, communities cannot make meaningful change.

“There’s a growing subset, which is the younger generations, that are growing up with [ChatGPT] as an accessible tool that won’t know any different,” Weston said. “Part of this environmental connection is making sure that people know what is going on in their communities. The more people know where their water is going, where their energy is going, the more likely it is that folks will be making really informed decisions.”

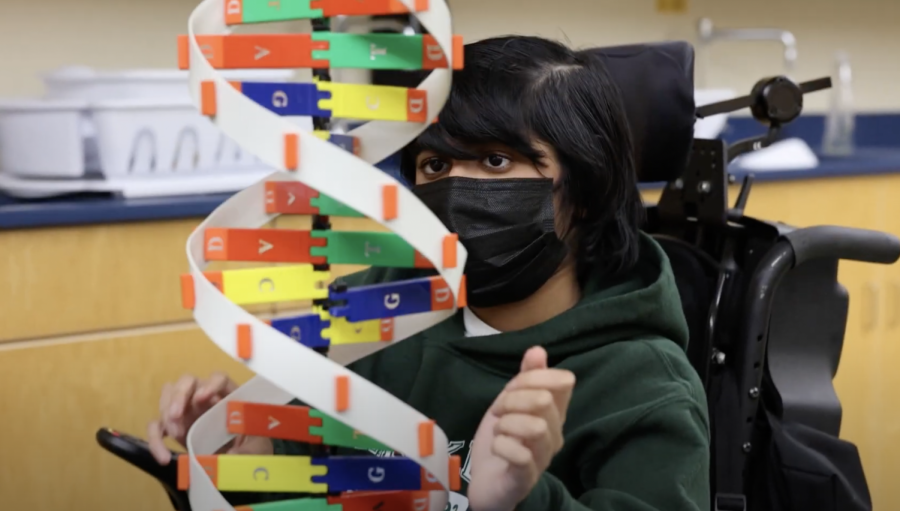

Junior Aditya Shivakumar, founder and president of HopeAI, an organization that seeks to use AI to address environmental challenges, acknowledged that large-scale usage of the technology may have detrimental effects. However, he also believed that the potential of AI would allow for permanent solutions to the climate crisis in the future.

“AI in terms of the environment is a double sword: on one end, there are environmental costs like requiring a lot of energy to run,” Aditya said. “But at the same time, it can also help with environmental causes. For example, AI can help with low-cost techniques for pollution tracking in oceans and ecosystems. There are a lot of new applications powered by AI that will be able to help us with the energy problem in the long run.”


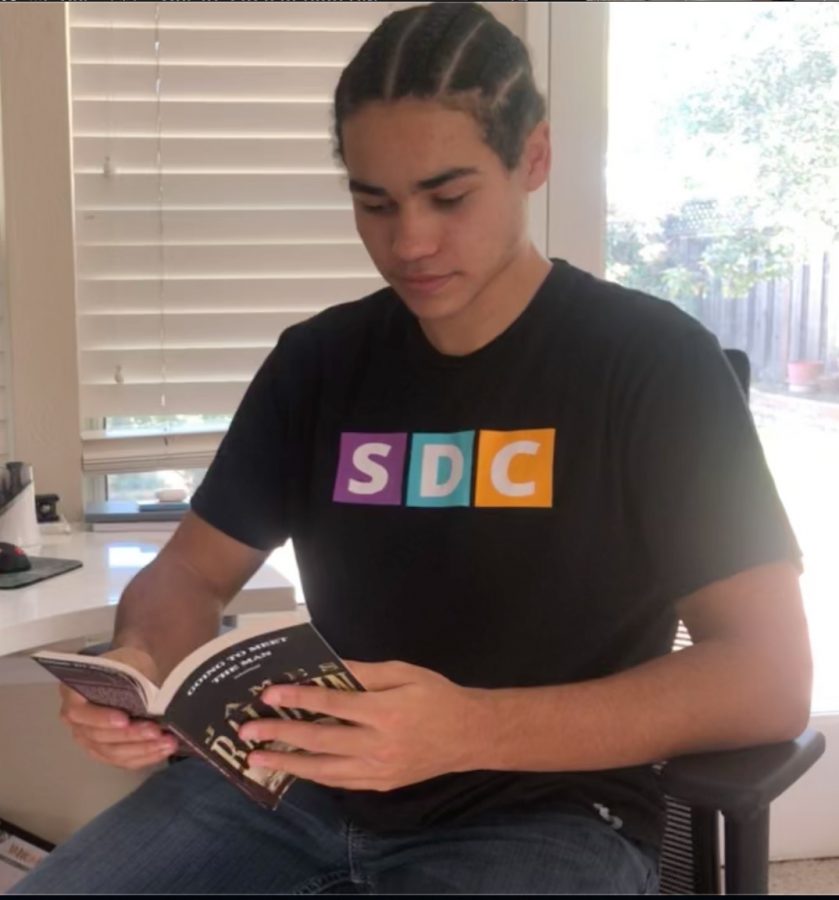


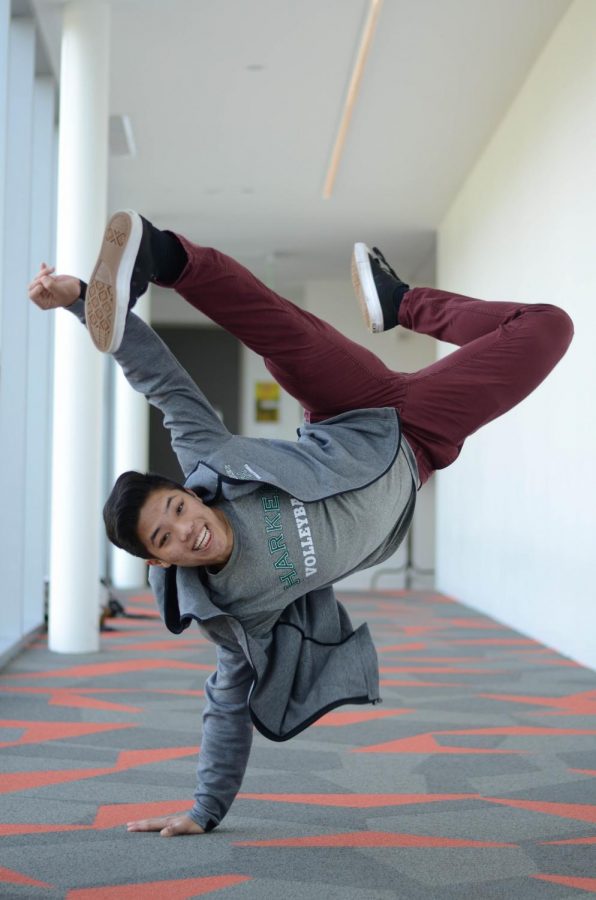
