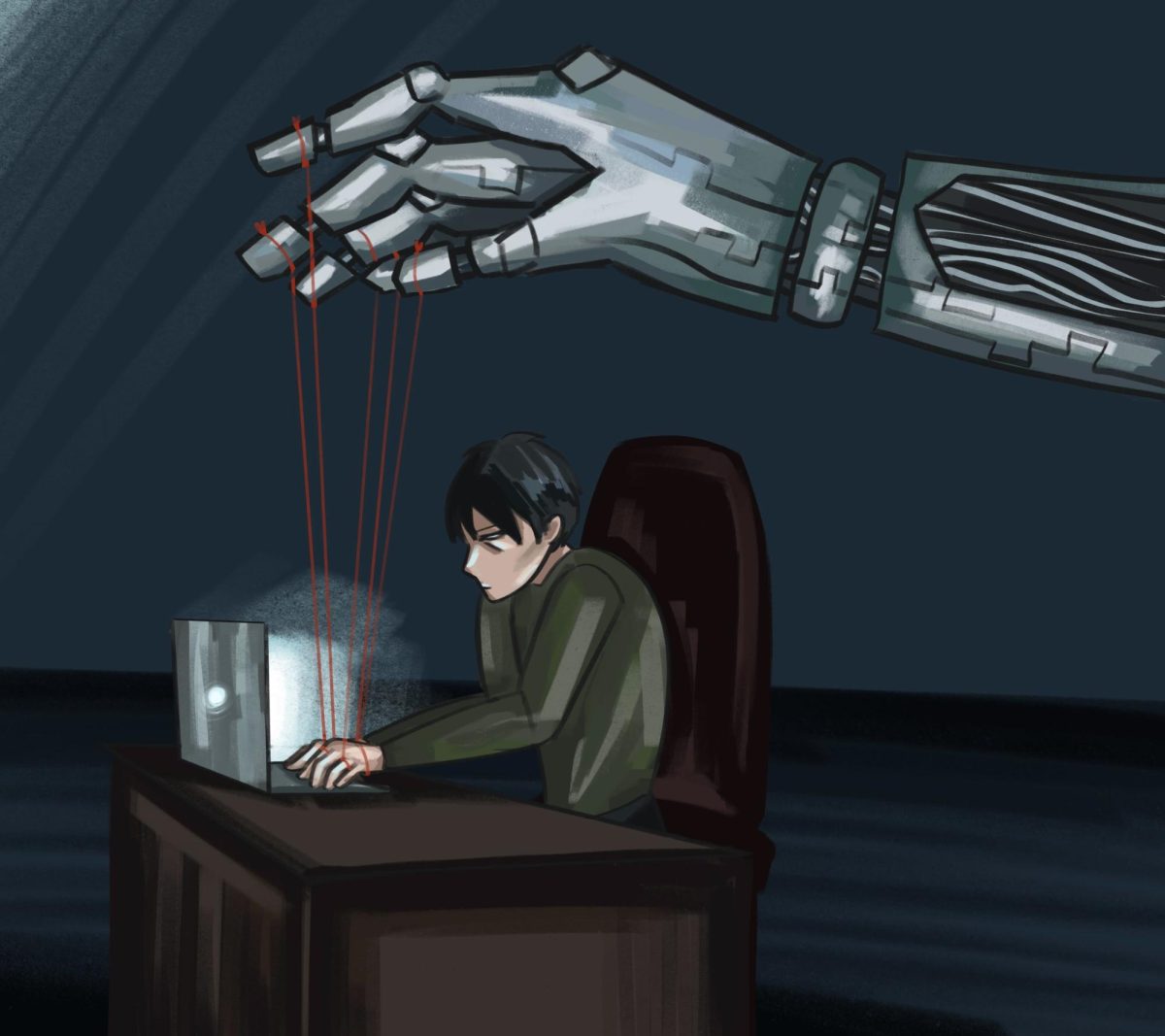

Large language models (LLMs) surged in popularity in 2022 with the release of ChatGPT. Three years later, more people have access to LLMs than ever, and many students turn to them to brainstorm essay ideas or polish grammar and word choice. However, this ubiquity raises concerns that the convenience of these tools comes at the cost of learning and originality.

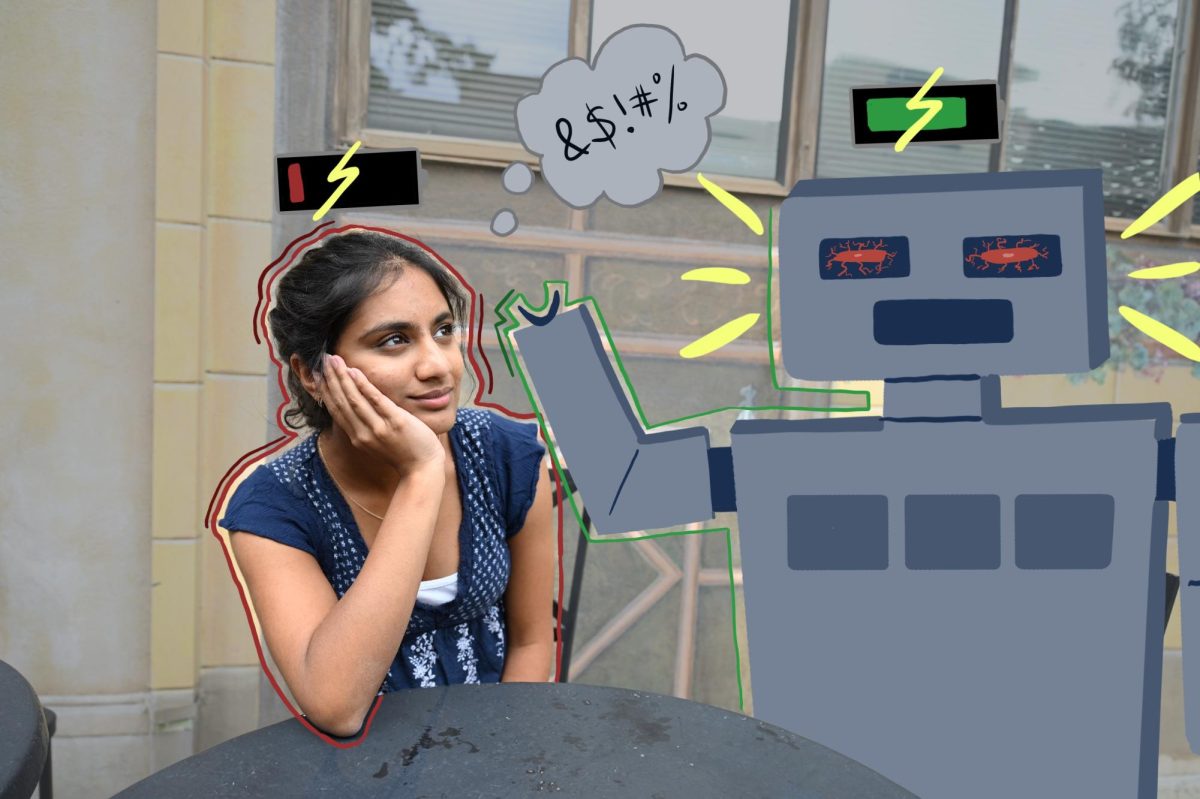

Language and Linguistics Club president and LLM researcher Linda Zeng (12) recognizes that human writing may eventually mimic LLMs as a result of their prominence.

“For people who use LLMs a lot, even when they stop using it, LLMs are almost their teacher and have taught them to write in this certain style,” Linda said. “20 to 50 years from now, our kids will definitely have a more LLM-like writing style if things keep going the way they are right now.”

LLM-generated writing often relies on patterns like frequent em dashes, lists of three, repetitive sentence structures and buzzwords like “multifaceted” and “transformative” that sound impressive but add little substance.

English teacher Christopher Hurshman comments on AI’s inability to write like a student who is truly invested in a specific topic.

“AI writing currently tends to be pretty generic, and it struggles to offer specific or sharp or insightful commentary,” Hurshman said. “It’s also difficult for it to link a series of observations to one another. [AI models] are not really designed to do synthetic reasoning or creative thinking. They’re designed to replicate the structure of language but not necessarily the thoughts.”

Students mainly turn to AI because it saves time and effort, since it can produce passable text in seconds. The platforms are simple to use and difficult to detect, and they seem to eliminate the most frustrating parts of the writing process. However, this reliance creates broader problems in how students experience writing.

“People have a lower frustration tolerance, and they’re more likely to resort to AI tools right away because they don’t think it should take this time,” Hurshman said. “That’s premised on a mistake about what learning looks like and what growth looks like. The danger of AI is that it sells you this promise that you can write without struggle, but the learning takes place in those moments of struggle.”

Some students recognize the importance of that struggle and prefer to take the hard route. Junior Jayden Liu refuses to use AI as a shortcut and completes all of his writing assignments on his own.

“While I understand that some people use AI to generate ideas or fix their writing, it’s important for me to struggle through the process myself, even if that means making mistakes or taking more time,” Jayden said. “Writing is about learning how to communicate and think clearly. If I used AI to do all of the work for me, I’d miss out on the chance to improve.”

Hurshman also worries that AI can suppress students’ originality, as these models’ polished yet generic outputs can push students toward more uniform ways of writing and thinking.

“[AI] is especially problematic for anyone who’s insecure about their ideas, which is most teens, since you’re just learning how to be original thinkers,” Hurshman said. “It takes a while for you to grow in confidence as an original thinker. In that sense, the generative AI outputs have the same problem as being exposed too early to expert opinions. You’re going to stop your creative thinking and just substitute their ideas.”

Linda echoes this sentiment, noticing that many of her classmates turn to AI as a replacement for their own critical thinking process.

“So many people, instead of just relying on their own intuition, directly put data into ChatGPT and ask for an analysis,” Linda said. “That’s very worrying, because it’s both that people aren’t learning to have critical thinking, and also that there’s the problem of homogenization where many people might have had different ideas, but now everyone is tending to have the same idea.”

Hurshman believes that neither banning AI entirely nor policing its use solves the problem of students’ overreliance on it. Instead, he argues that the most effective approach is to empower students to actively choose authenticity.

“If you want students to care about their writing, you have to acknowledge that AI exists in the world, and then you have to convince them that the kind of writing that you’re offering in your class is way better,” Hurshman said. “If you can convince a Harker student that actually their writing is way better than what an AI can do, and their ideas are way better than what an AI can do, then it’s motivating.”
