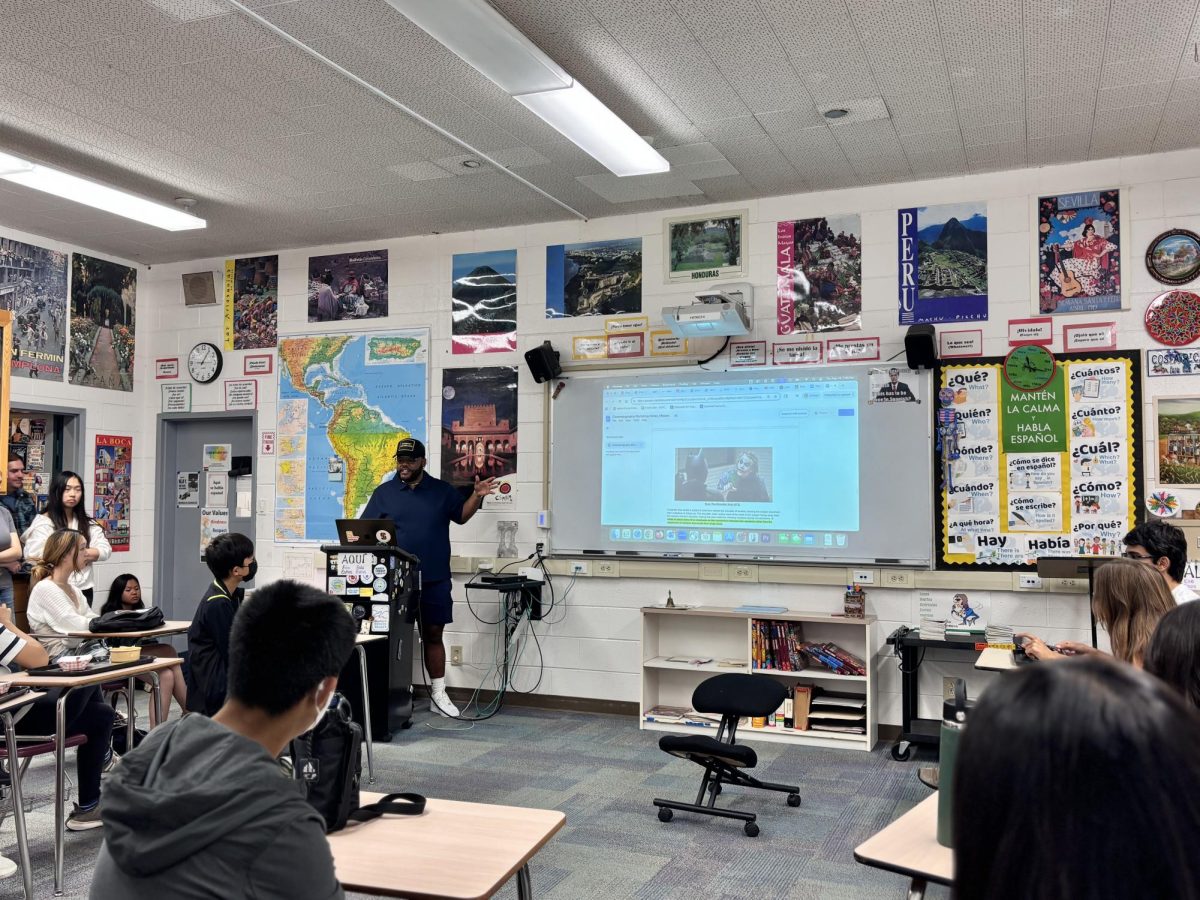

University of California, Santa Cruz Professor Yi Zhang presented her award-winning research in natural language processing, multimodality and information retrieval in Chris Spenner’s room last Friday from 3:15 to 4:15 p.m.

The session began with Research Club Officer Linda Zeng (10) introducing Dr. Zhang’s background. Linda met Dr. Zhang at the California State Summer School for Mathematics and Science program, where Dr. Zhang was the main professor teaching Cluster Eleven, a class focused on artificial intelligence.

“The way she frames explaining machine learning to people who aren’t familiar with it is really good,” Linda said. “First as a mathematical function and then as a human brain — I’ll definitely use some of her ideas when I try to explain my research to other people.”

To begin the presentation, Dr. Zhang dove into the intricacies of AI language models, the type of system behind ChatGPT and other chatbots. Before responding, a language model must first understand natural human language. The program converts sentences into structured representations and handles variations in structure as well as ambiguities, such as similar sentences with different meanings.

Dr. Zhang elaborated on the natural language generation portion of the language model, outlining the two main types: retrieval-based and generation-based. While retrieval-based models compare inputs with a database of predefined responses, generation-based models can dynamically generate responses, either through filling the gaps of a template or through “sequence-to-sequence”-based machine learning, where the model learns how to respond to inputs through training.

Focusing on the adaptive capabilities of AI, Dr. Zhang explained how “computational” neurons drive machine learning. Imitating the function of biological neurons, computational neurons process input-output pairs and learn to recognize patterns. Given enough data, a network of neurons can formulate a function to respond to any prompt. Dr. Zhang compared the function to how humans determine a table is a table.
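As a rough illustration of a computational neuron learning from input-output pairs, the sketch below trains a single perceptron on the logical AND function. The choice of task, the function names and the classic perceptron update rule are assumptions for illustration, not details from the presentation.

```python
def predict(weights, bias, inputs):
    """Fire (output 1) when the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_neuron(pairs, epochs=20, learning_rate=1.0):
    """Adjust weights whenever the neuron's output disagrees with the target."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in pairs:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Input-output pairs for logical AND (hypothetical training data).
AND_PAIRS = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_neuron(AND_PAIRS)
for inputs, target in AND_PAIRS:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

A single neuron like this can only learn patterns that a straight line can separate. Large language models combine very large numbers of such units in layered networks, which is what lets them approximate far more complex functions.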

“Large-scale language models can be used to write stories, write poems, answer questions, translate languages and even more,” Dr. Zhang said. “Imagine if you have read all the books in the world and all the documents on the Internet, what will you be? Those neural networks have done that, and they trained their model that way.”

Dr. Zhang also mentioned the possible impacts of AI models on society, including on employment, education and entertainment. Although these AI programs may offer assistance, she also addressed concerns surrounding AI seizing jobs and creative roles in the future. Daniel Wu (10), who attended the lecture, commented on the impacts of AI.

“I don’t think AI is going to take over society, and we’re all going to die, but I think a lot of jobs will be replaced,” Daniel said. “The necessity of work is going to shift, and a lot of labor is going to become doable by AI.”

Transitioning to research advice, Dr. Zhang cautioned the audience about the intense critique that research journal editorial committees give to submissions. She recommended that researchers revise and resubmit papers that are not accepted, stressing that many highly regarded papers in the scientific community underwent multiple rounds of rejection before finally being published.

The presentation ended with a Q&A segment in which Dr. Zhang responded to questions about her experience in research labs and requests for advice on submitting to conferences. The audience also asked about the possible uses and limitations of AI.

“There are certain things that AI cannot replace,” Dr. Zhang said. “Initially, people think that creativity will not be replaced, but it will. I think it’ll be like 60% done by AI and 40% done by humans. In the field, we call it ‘co-pilot’ instead of ‘pilot’, because in the end, AI still makes mistakes.”

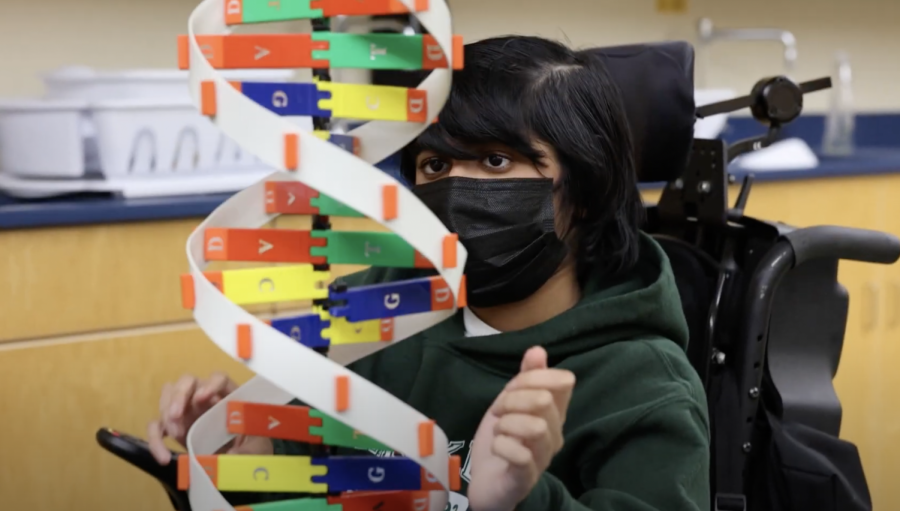

![“[Building nerf blasters] became this outlet of creativity for me that hasn't been matched by anything else. The process [of] making a build complete to your desire is such a painstakingly difficult process, but I've had to learn from [the skills needed from] soldering to proper painting. There's so many different options for everything, if you think about it, it exists. The best part is [that] if it doesn't exist, you can build it yourself," Ishaan Parate said.](https://harkeraquila.com/wp-content/uploads/2022/08/DSC_8149-900x604.jpg)

![“When I came into high school, I was ready to be a follower. But DECA was a game changer for me. It helped me overcome my fear of public speaking, and it's played such a major role in who I've become today. To be able to successfully lead a chapter of 150 students, an officer team and be one of the upperclassmen I once really admired is something I'm [really] proud of,” Anvitha Tummala ('21) said.](https://harkeraquila.com/wp-content/uploads/2021/07/Screen-Shot-2021-07-25-at-9.50.05-AM-900x594.png)

![“I think getting up in the morning and having a sense of purpose [is exciting]. I think without a certain amount of drive, life is kind of obsolete and mundane, and I think having that every single day is what makes each day unique and kind of makes life exciting,” Neymika Jain (12) said.](https://harkeraquila.com/wp-content/uploads/2017/06/Screen-Shot-2017-06-03-at-4.54.16-PM.png)

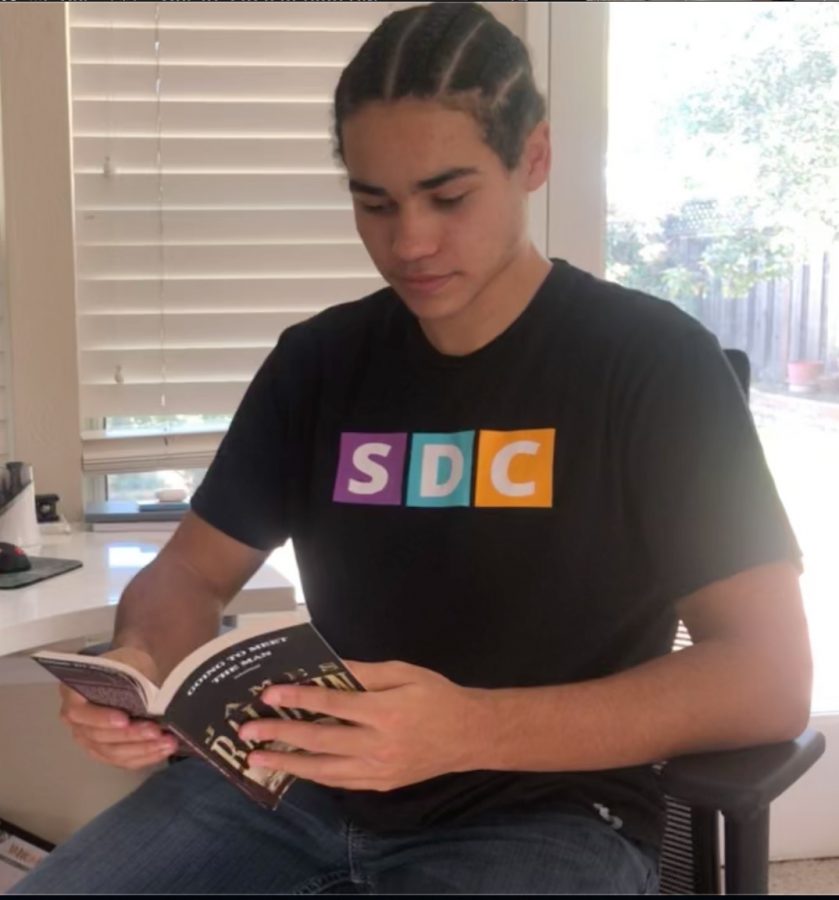

![“My slogan is ‘slow feet, don’t eat, and I’m hungry.’ You need to run fast to get where you are–you aren't going to get those championships if you aren't fast,” Angel Cervantes (12) said. “I want to do well in school on my tests and in track and win championships for my team. I live by that, [and] I can do that anywhere: in the classroom or on the field.”](https://harkeraquila.com/wp-content/uploads/2018/06/DSC5146-900x601.jpg)

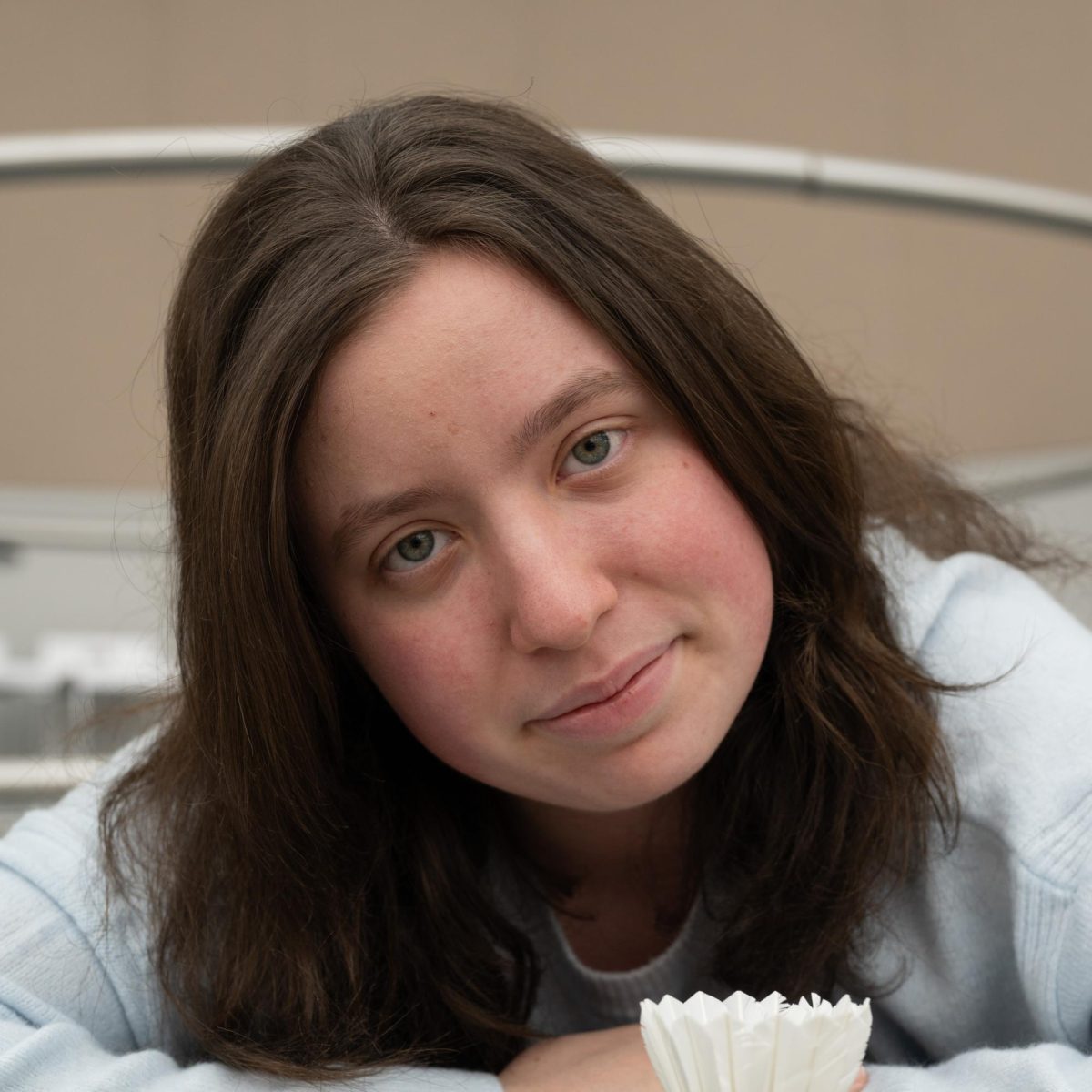

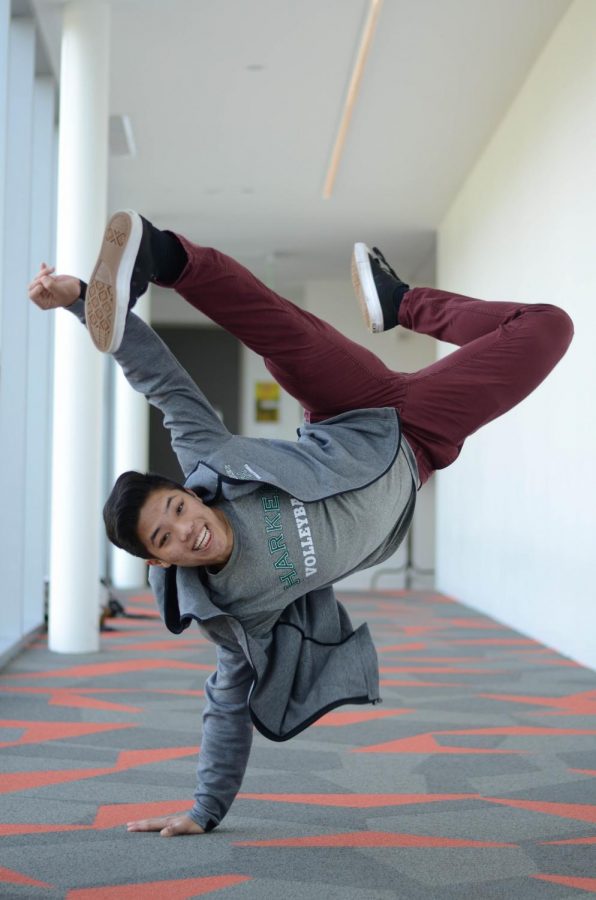

![“[Volleyball has] taught me how to fall correctly, and another thing it taught is that you don’t have to be the best at something to be good at it. If you just hit the ball in a smart way, then it still scores points and you’re good at it. You could be a background player and still make a much bigger impact on the team than you would think,” Anya Gert (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AnnaGert_JinTuan_HoHPhotoEdited-600x900.jpeg)

![“I'm not nearly there yet, but [my confidence has] definitely been getting better since I was pretty shy and timid coming into Harker my freshman year. I know that there's a lot of people that are really confident in what they do, and I really admire them. Everyone's so driven and that has really pushed me to kind of try to find my own place in high school and be more confident,” Alyssa Huang (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AlyssaHuang_EmilyChen_HoHPhoto-900x749.jpeg)