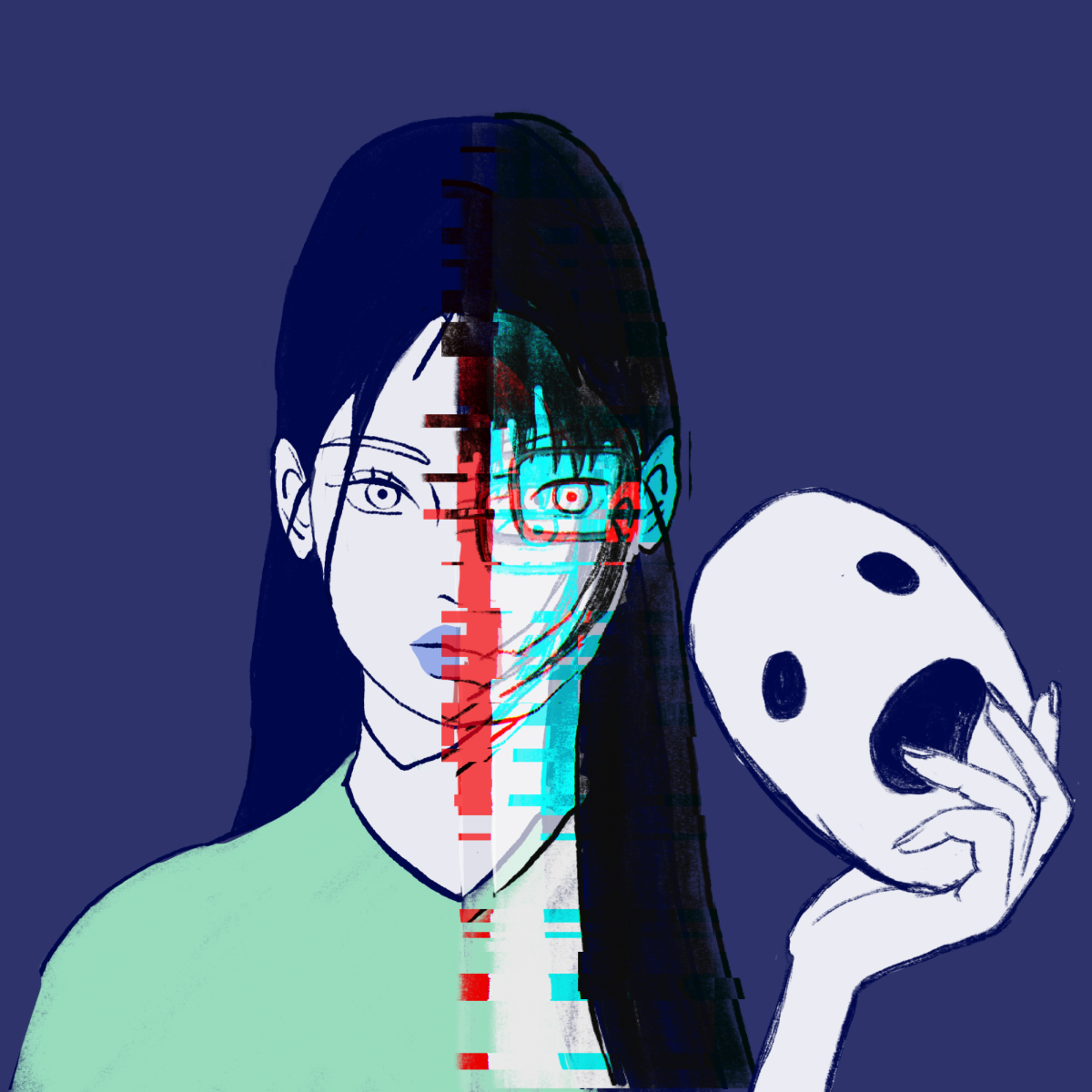

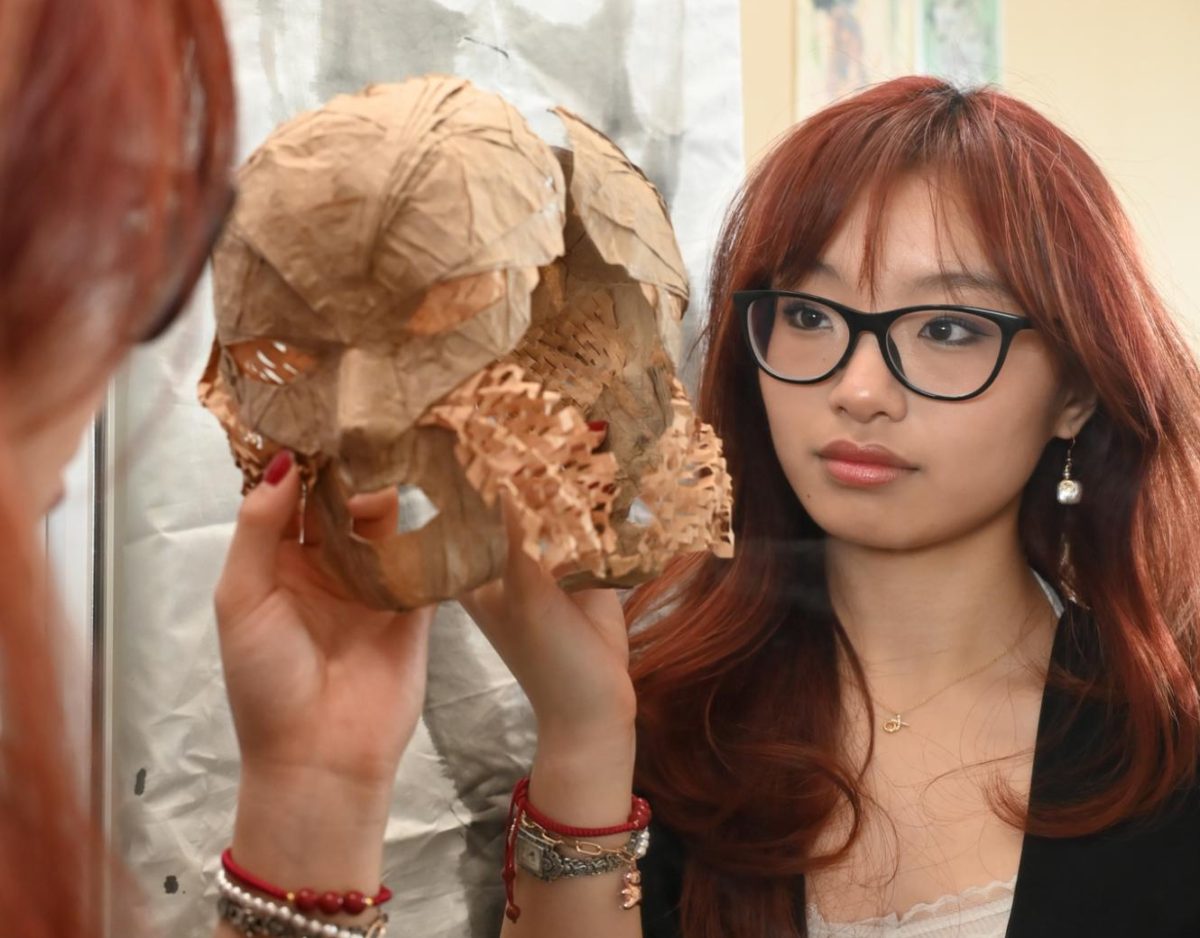

Seeing something can no longer mean believing it. A decade ago, the idea of artificial intelligence (AI) fabricating convincing footage of people's words and actions sounded far-fetched. Today, deepfake technology makes it a plausible reality.

Deepfakes are synthetic media produced with deep learning, a branch of AI, that can generate convincing videos by manipulating facial features and voices. Their danger lies in how easily the technology can be abused. Because the public gets much of its information from videos on social platforms and news outlets, deepfakes could easily spread false information.

With the 2024 elections approaching, deepfake technology could have major implications for political campaigns. During a volatile election season, AI-generated content that spreads widely can sway public opinion and tarnish public figures’ reputations. In 2018, a fake video of former President Barack Obama, created by American actor and director Jordan Peele, surfaced online. The realistic deepfake of Obama mirrored Peele’s facial movements with surgical precision. While this video was created as a warning rather than with hostile intent, malicious deepfakes soon followed. Last year, a hacker posted a video of Ukrainian President Volodymyr Zelenskyy calling on his soldiers to lay down their weapons and return to their families. People quickly recognized this deepfake and social media platforms removed it, but the hoax still caused widespread confusion on Facebook, YouTube and Twitter.

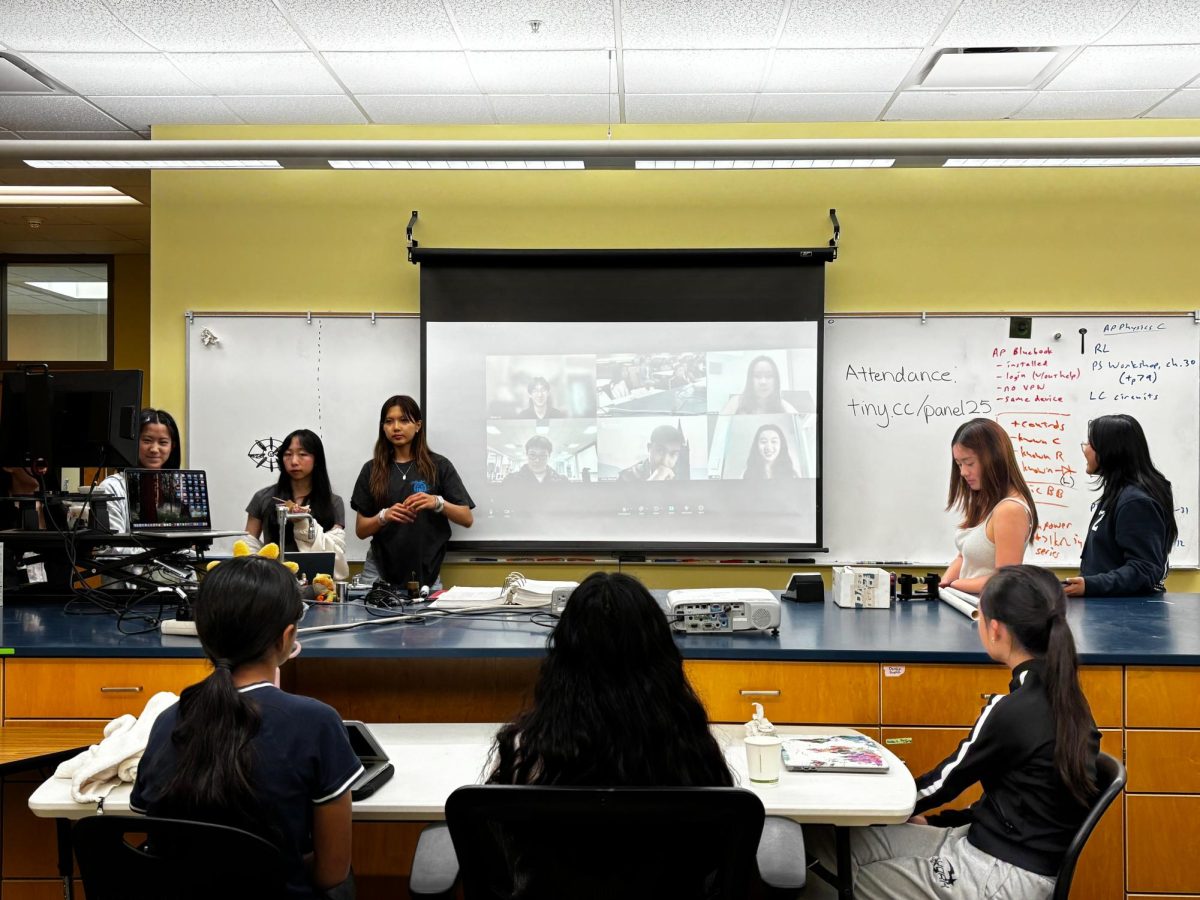

Most recently, President Biden has become a frequent target of deepfake creators. In early February, a doctored video showed him calling for a mandatory draft to send American troops to fight in Ukraine, angering many viewers. Once the video was exposed as fake, it drew attention to the growing threat of deepfake technology. Programming Club President Joe Li (12) underscores the importance of verifying information in these changing times.

“On several social media platforms, there are community notes or other mechanisms where the audience can tell whether a video is real or fake,” Joe said. “If the community collectively agrees that a video is fake, then we can counter the spread of misinformation.”

AI-generated media is becoming increasingly difficult to distinguish from real content. Paradoxically, research aimed at detecting the differences can end up helping the AI improve. As soon as a well-intentioned researcher publishes a study pointing out a flaw in deepfake models, creators promptly fix it. In 2018, researchers published a paper showing that faces in deepfake videos blinked far less often than real people do. They hoped viewers could learn to spot fake videos by paying attention to blinking patterns, but the approach backfired. Instead of helping people avoid falling prey to misinformation, the study showed programmers how to improve their models, and subsequent deepfakes were much more realistic.

In addition to becoming more sophisticated, deepfakes are becoming easier to create. In the past, making one required a facial recognition algorithm and an autoencoder. Now, anyone can produce a reasonably realistic video with off-the-shelf software and apps. All a user has to do is provide some training data, such as photos or video clips of the person being imitated.

The increased accessibility of deepfake technology makes it a bigger threat. Even a single compromising video has the potential to harm someone’s career. Joe believes that people will inevitably abuse that power.

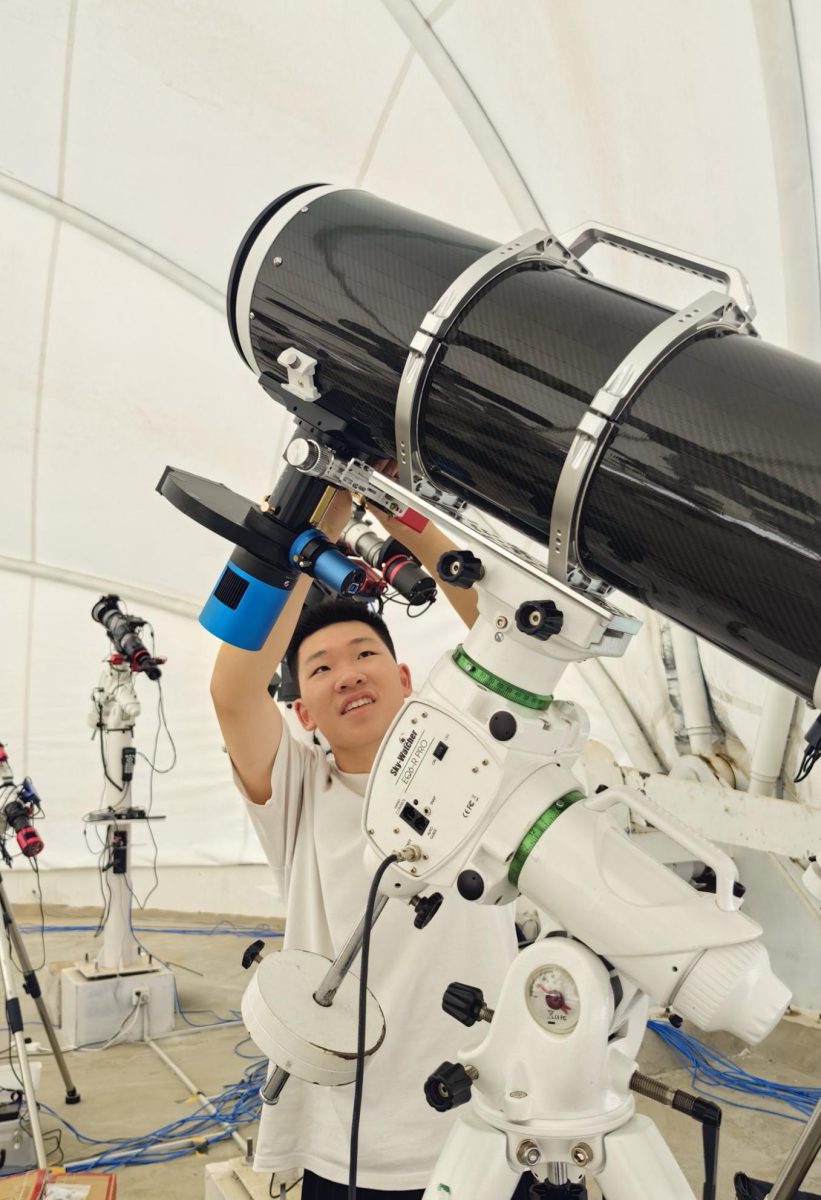

“I don’t see too many benefits of deepfake because you’re creating something that’s not actually real,” Joe said. “But I think it does show how far AI has come. If we use our knowledge of AI in other fields, then we can bring a lot more advancements.”

Still, deepfake technology has some potential to help society. The film industry is increasingly using it, giving directors the chance to explore new creative territory. Popular franchises such as the Marvel Cinematic Universe are beginning to test early forms of this AI to de-age actors or create hyper-realistic visual effects. As deepfakes become more common in filmmaking, the technology could eliminate the need for actors to appear at every shoot. There has also been speculation that deceased actors could be “re-cast” in new movies through these deep generative models. Of course, legal and ethical concerns will largely determine how practical these new applications are. Actors are increasingly worried about being “replaced” by this technology, a concern that contributed to the SAG-AFTRA strike against the Alliance of Motion Picture and Television Producers.

AI has come a long way in recent years. Now the question remains: is this innovation for better or for worse?

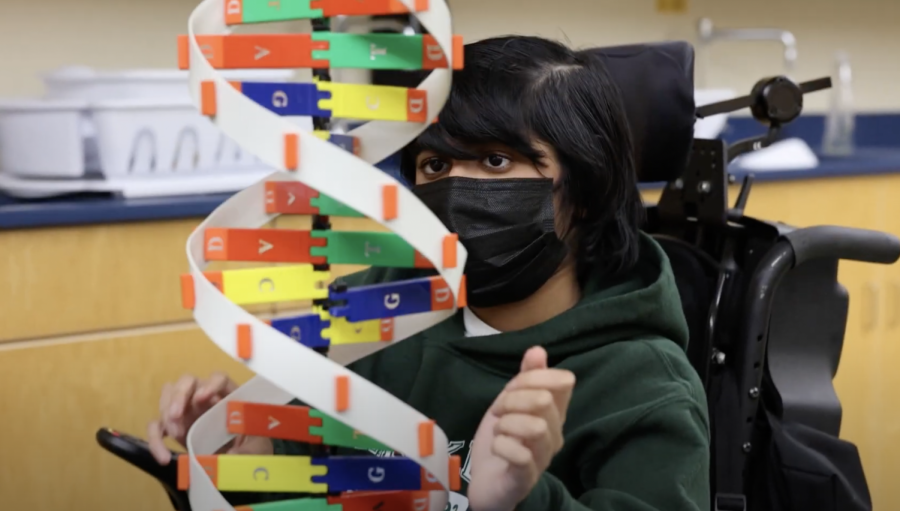

![“[Building nerf blasters] became this outlet of creativity for me that hasn't been matched by anything else. The process [of] making a build complete to your desire is such a painstakingly difficult process, but I've had to learn from [the skills needed from] soldering to proper painting. There's so many different options for everything, if you think about it, it exists. The best part is [that] if it doesn't exist, you can build it yourself," Ishaan Parate said.](https://harkeraquila.com/wp-content/uploads/2022/08/DSC_8149-900x604.jpg)

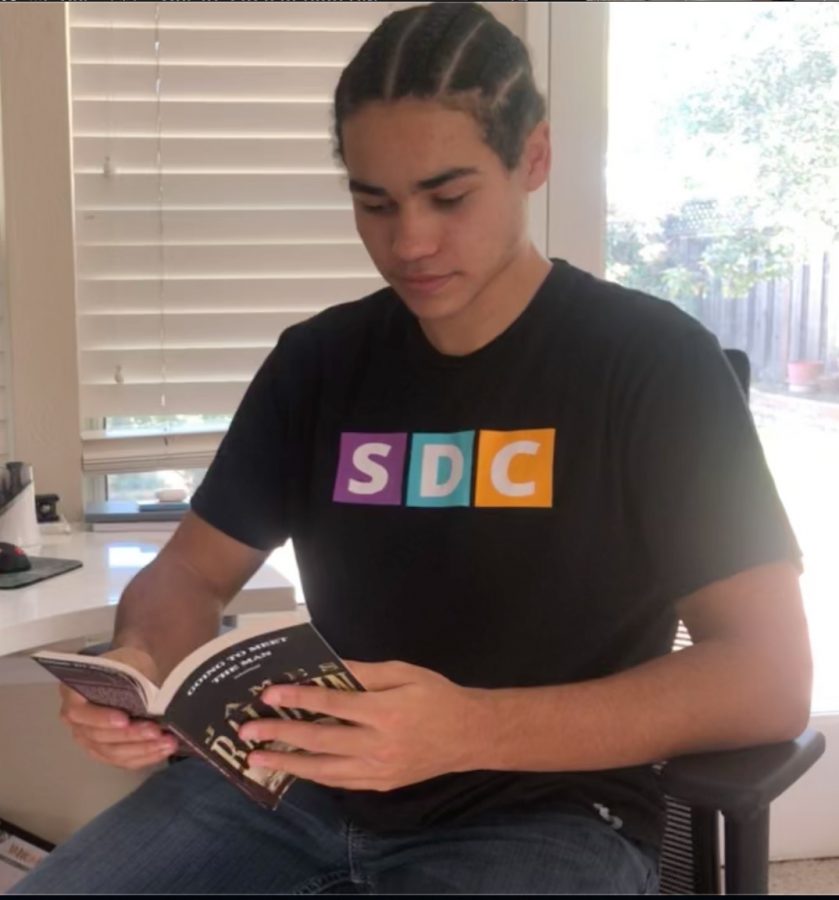

![“When I came into high school, I was ready to be a follower. But DECA was a game changer for me. It helped me overcome my fear of public speaking, and it's played such a major role in who I've become today. To be able to successfully lead a chapter of 150 students, an officer team and be one of the upperclassmen I once really admired is something I'm [really] proud of,” Anvitha Tummala ('21) said.](https://harkeraquila.com/wp-content/uploads/2021/07/Screen-Shot-2021-07-25-at-9.50.05-AM-900x594.png)

![“I think getting up in the morning and having a sense of purpose [is exciting]. I think without a certain amount of drive, life is kind of obsolete and mundane, and I think having that every single day is what makes each day unique and kind of makes life exciting,” Neymika Jain (12) said.](https://harkeraquila.com/wp-content/uploads/2017/06/Screen-Shot-2017-06-03-at-4.54.16-PM.png)

![“My slogan is ‘slow feet, don’t eat, and I’m hungry.’ You need to run fast to get where you are–you aren't going to get those championships if you aren't fast,” Angel Cervantes (12) said. “I want to do well in school on my tests and in track and win championships for my team. I live by that, [and] I can do that anywhere: in the classroom or on the field.”](https://harkeraquila.com/wp-content/uploads/2018/06/DSC5146-900x601.jpg)

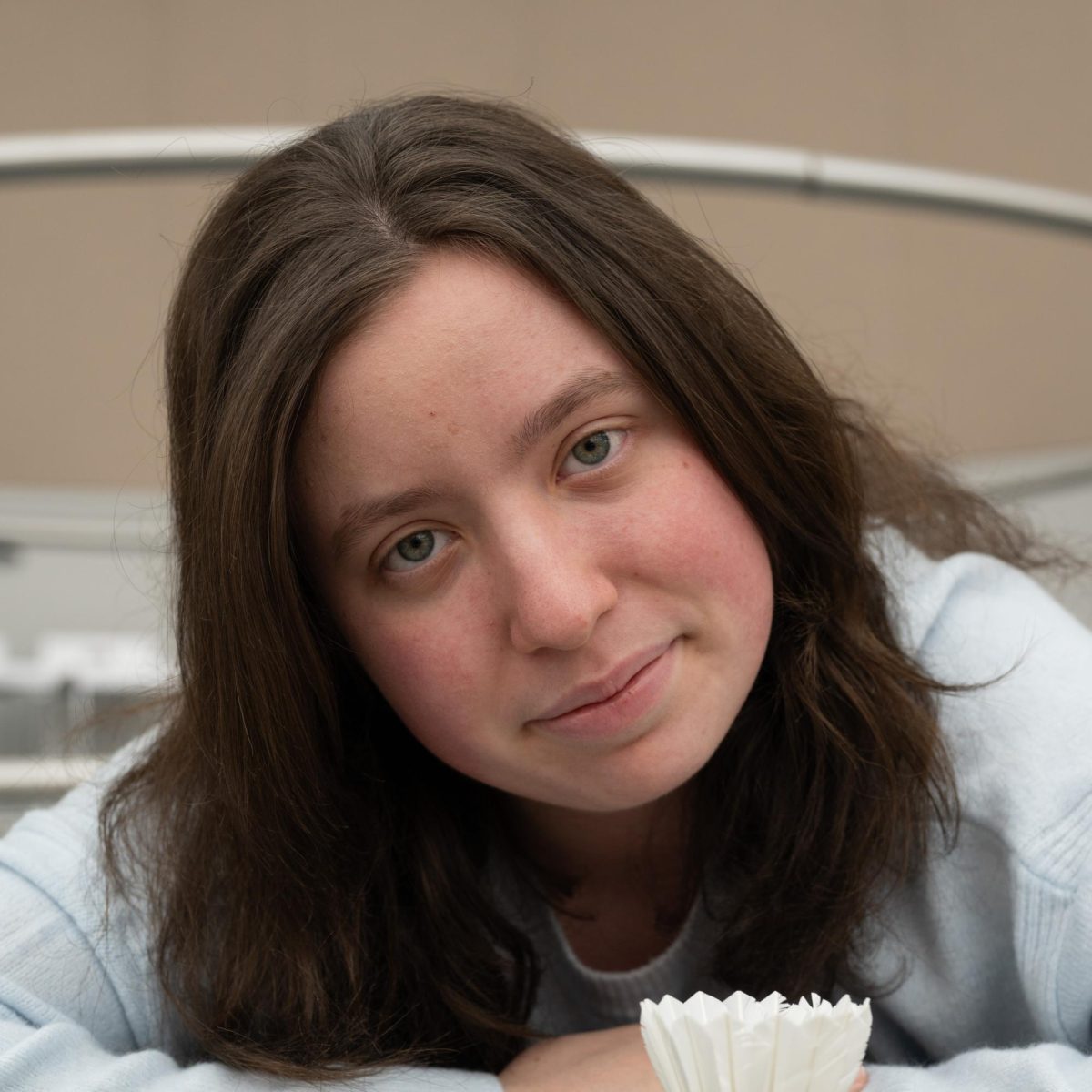

![“[Volleyball has] taught me how to fall correctly, and another thing it taught is that you don’t have to be the best at something to be good at it. If you just hit the ball in a smart way, then it still scores points and you’re good at it. You could be a background player and still make a much bigger impact on the team than you would think,” Anya Gert (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AnnaGert_JinTuan_HoHPhotoEdited-600x900.jpeg)

![“I'm not nearly there yet, but [my confidence has] definitely been getting better since I was pretty shy and timid coming into Harker my freshman year. I know that there's a lot of people that are really confident in what they do, and I really admire them. Everyone's so driven and that has really pushed me to kind of try to find my own place in high school and be more confident,” Alyssa Huang (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AlyssaHuang_EmilyChen_HoHPhoto-900x749.jpeg)