Moral dilemmas arise with new self-driving cars and advanced artificial intelligence

September 3, 2018

In October 2017, a bill titled the “AV START Act,” designed to remove some restrictions on the operation of self-driving car technology, unanimously passed the Senate Commerce Committee, a significant legislative step forward for autonomous technologies.

Momentum to bring the bill to a vote stalled in March 2018, when a self-driving Uber car fatally struck 49-year-old Elaine Herzberg in Tempe, Arizona, and self-driving technologies drew more pushback. Now, to ensure the bill’s passage, proponents of the act are trying to attach it to a larger package that is expected to pass. This back-and-forth on the bill’s prospects brings special attention to the ethical issues surrounding autonomous vehicles.

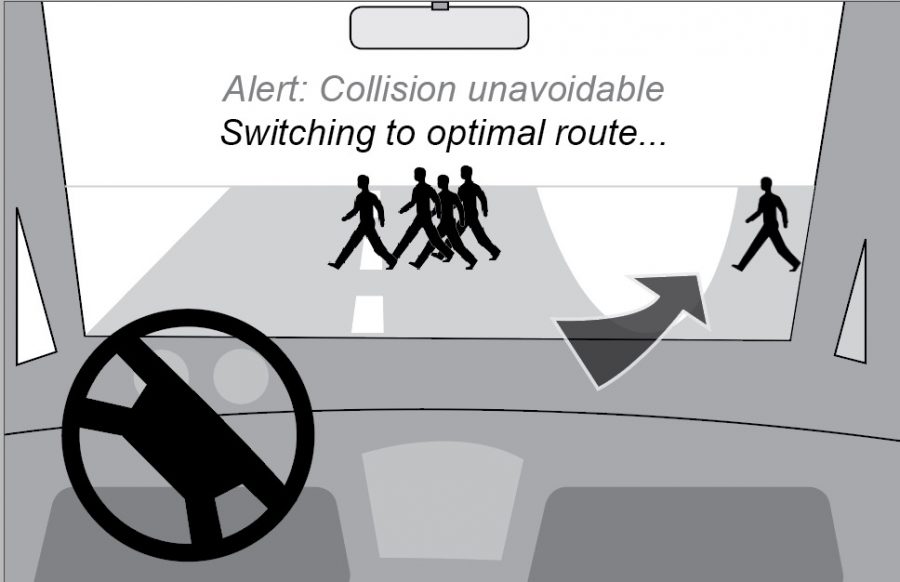

One of these concerns involves the trolley problem, a thought experiment that has challenged ethicists for over 50 years. In the original version of this infamous experiment, a trolley is barreling down a track which would lead it to crash into five people, and a human is placed in the situation where she can switch the tracks and cause the trolley to hit only one person.

The thought experiment originally prompted discussion and studies of what a human would do in such a situation. Now, it raises questions about how an autonomous car would handle similar situations. What type of decision would it make? Would it allow more people to die, or would it accept culpability in order to save more lives?

In addressing the ethical problem, some simply question the practicality of such a thought experiment, and thus whether it is worth discussing.

“When talking about self-driving car problems, a lot of people draw up scenarios such as the trolley problem,” Leilani Hendrina Gilpin, a Ph.D. student at MIT currently focusing her research on autonomous machines, said. “Those kinds of things don’t really happen in real life, so they aren’t the most meaningful, though they do get a lot of press right now.”

Some also believe the only way to solve such a dilemma is to have more advanced self-driving cars that are perfect or close to perfect.

“As humans we can reason that, ‘Oh, this person wanted to save more lives’ or if they didn’t, maybe we would say ‘They were overwhelmed with the decision.’ We are pretty tolerable with humans, while we aren’t tolerable with fallible machines,” former ethics and philosophy teacher Dr. Shaun Jahshan said. “I think what the industry will demand is infallible machines, where there are plenty of failsafes and extra precautions.”

When self-driving cars do make mistakes, however, another host of questions comes with them. The most prominent is: whose fault is it when a problem occurs with an autonomous vehicle? This question of blame often becomes tangled between software, hardware, and the human driver.

“In the Uber accident, the lidar did detect a human, but the software characterized it as a false positive, so this question is a very interesting one because who is at fault there?” Gilpin said. “I think we need more information to answer these questions. I also believe that a really big thing is that we need to make a lot of these data records public.”

Others say that these problems will be encountered and dealt with through time and law.

“I think the same issues of fault apply to the technology we have now, so we will have to build a body of law, of precedent, like we have now for other things,” Dr. Jahshan said. “What will happen is that, year by year, we’ll get more used to this idea of having self-driving cars that function reasonably well most of the time. And as we go through cycles of discussion about these things, then we will be able to come up with norms.”

This problem of blame ties into accountability issues, which may restrain the future autonomy of such vehicles.

“I think taking human drivers completely out of the picture will happen in the distant future. What we’ve seen, say even in the Uber accident, is that when we have humans in the loop, they aren’t perfect at all,” Gilpin said. “However, it’s very easy to blame a person in the front seat if anything goes wrong. I think a big reason that drivers will be around for a long time is liability.”

This piece was originally published in the pages of The Winged Post on Aug. 31, 2018.

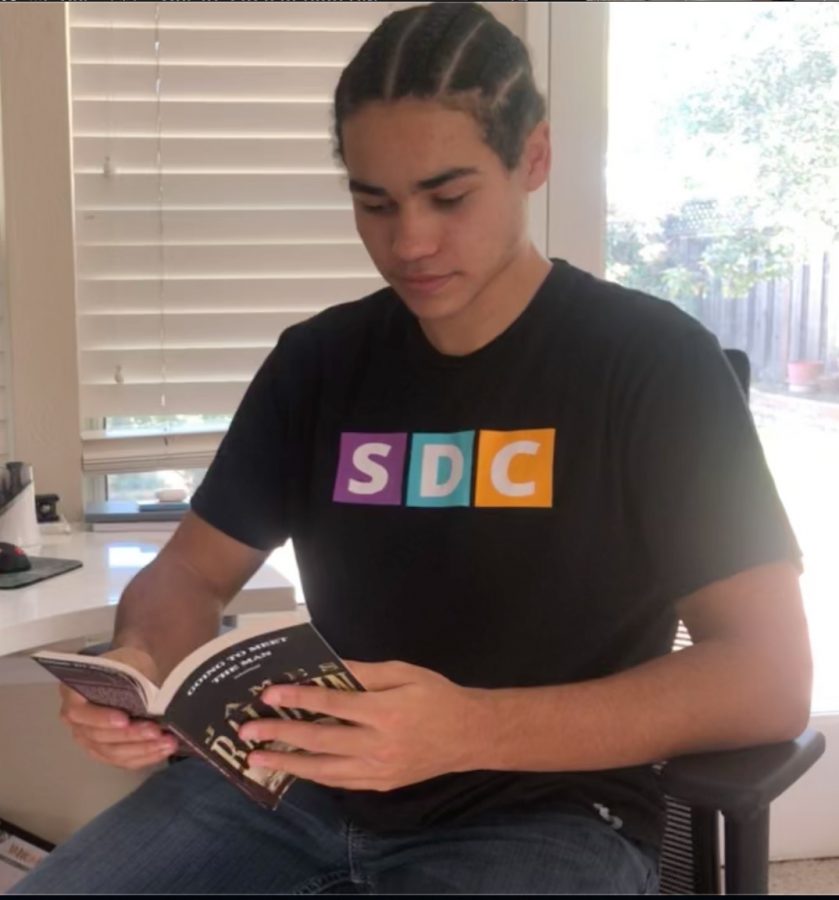

![“[Building nerf blasters] became this outlet of creativity for me that hasn't been matched by anything else. The process [of] making a build complete to your desire is such a painstakingly difficult process, but I've had to learn from [the skills needed from] soldering to proper painting. There's so many different options for everything, if you think about it, it exists. The best part is [that] if it doesn't exist, you can build it yourself," Ishaan Parate said.](https://harkeraquila.com/wp-content/uploads/2022/08/DSC_8149-900x604.jpg)

![“When I came into high school, I was ready to be a follower. But DECA was a game changer for me. It helped me overcome my fear of public speaking, and it's played such a major role in who I've become today. To be able to successfully lead a chapter of 150 students, an officer team and be one of the upperclassmen I once really admired is something I'm [really] proud of,” Anvitha Tummala ('21) said.](https://harkeraquila.com/wp-content/uploads/2021/07/Screen-Shot-2021-07-25-at-9.50.05-AM-900x594.png)

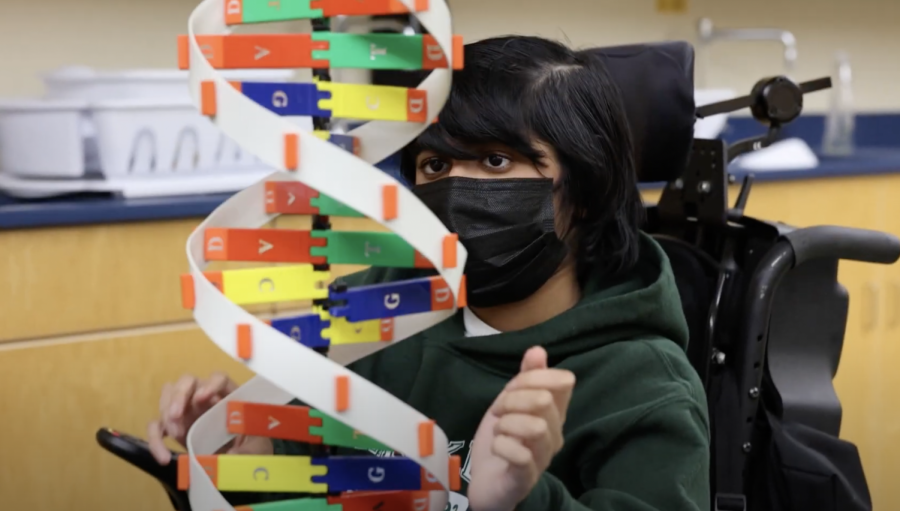

![“I think getting up in the morning and having a sense of purpose [is exciting]. I think without a certain amount of drive, life is kind of obsolete and mundane, and I think having that every single day is what makes each day unique and kind of makes life exciting,” Neymika Jain (12) said.](https://harkeraquila.com/wp-content/uploads/2017/06/Screen-Shot-2017-06-03-at-4.54.16-PM.png)

![“My slogan is ‘slow feet, don’t eat, and I’m hungry.’ You need to run fast to get where you are–you aren't going to get those championships if you aren't fast,” Angel Cervantes (12) said. “I want to do well in school on my tests and in track and win championships for my team. I live by that, [and] I can do that anywhere: in the classroom or on the field.”](https://harkeraquila.com/wp-content/uploads/2018/06/DSC5146-900x601.jpg)

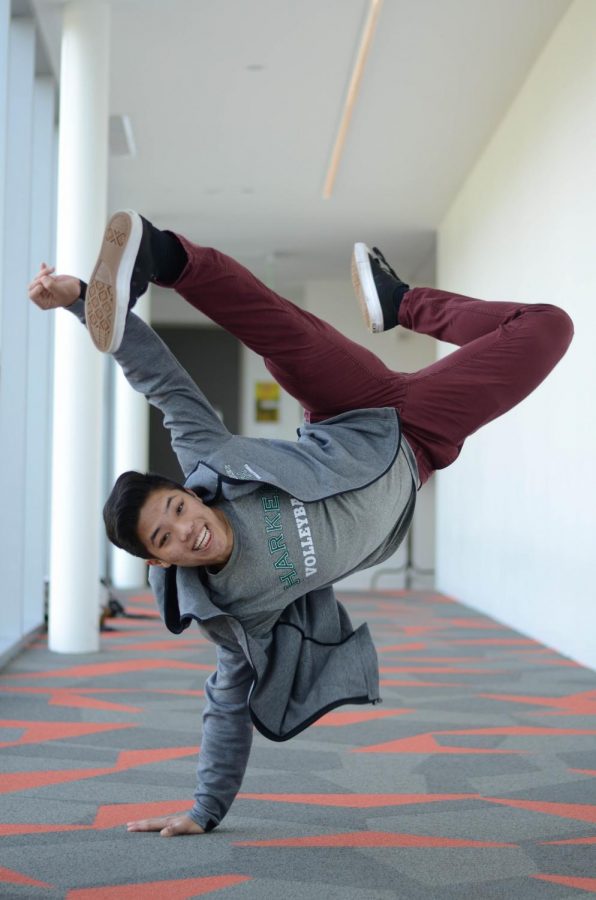

![“[Volleyball has] taught me how to fall correctly, and another thing it taught is that you don’t have to be the best at something to be good at it. If you just hit the ball in a smart way, then it still scores points and you’re good at it. You could be a background player and still make a much bigger impact on the team than you would think,” Anya Gert (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AnnaGert_JinTuan_HoHPhotoEdited-600x900.jpeg)

![“I'm not nearly there yet, but [my confidence has] definitely been getting better since I was pretty shy and timid coming into Harker my freshman year. I know that there's a lot of people that are really confident in what they do, and I really admire them. Everyone's so driven and that has really pushed me to kind of try to find my own place in high school and be more confident,” Alyssa Huang (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AlyssaHuang_EmilyChen_HoHPhoto-900x749.jpeg)