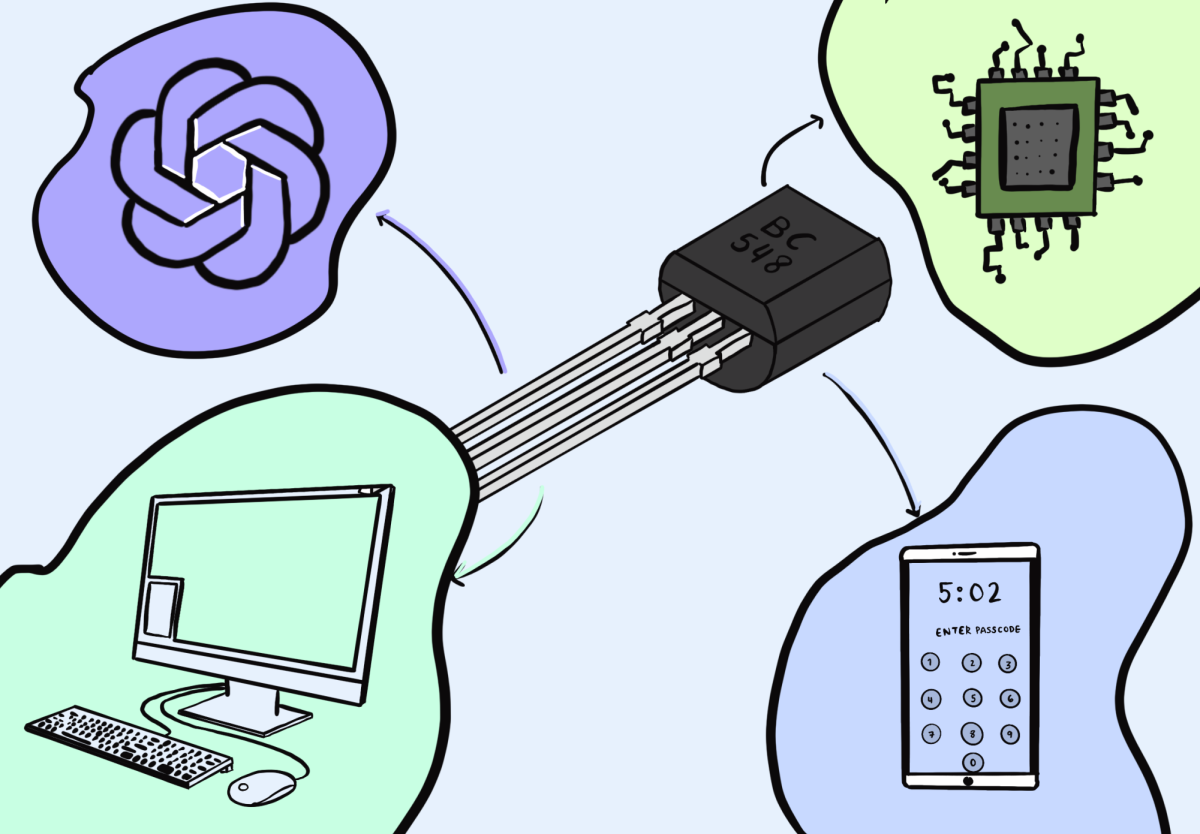

Ever wonder how humans went from the massive computers of the 1940s to the handheld smartphones of the modern era? The answer lies within the transistor. Found in every electronic device, from cell phones to smart fridges, these components are the building blocks of modern technology.

Seventy-five years ago, in December 1947, American physicists William Shockley, Walter Brattain and John Bardeen of Bell Labs demonstrated the first working semiconductor transistor as a “magnificent Christmas present” to their executives, according to Shockley. This prototype, known as the point-contact transistor, consisted of a block of germanium, which is a semiconductor, and gold foil wrapped around a spring-held plastic wedge. The wedge pressed the foil into point contact with the germanium base to facilitate the flow of current.

In January 1948, Shockley, the lead physicist of the research group, conceived a more robust design named the junction transistor. These smaller, more reliable junction transistors went on to replace unstable, bulky vacuum tubes, allowing for more compact and efficient computer designs.

In 1958 and 1959, Jack Kilby, an engineer at Texas Instruments and future Nobel Prize laureate, and Robert Noyce, co-founder of Fairchild Semiconductor and later Intel Corporation, independently developed methods to integrate, or group, transistors on a single piece of semiconductor to form integrated circuits (ICs).

The basis of transistors lies within semiconductors (most commonly silicon), which conduct electricity only under certain conditions. Pure silicon alone conducts current poorly, so impurities are introduced to the material in a process called doping, forming two types of semiconductors: p-type and n-type. When put side by side, they form a PN junction. Transistors are built from such junctions, and this configuration allows the amplification of current as well as the regulation of its flow.

Nowadays, highly complicated integrated circuits contain billions of transistors on one chip. Nvidia’s H100 GPU, a computing engine behind many AI systems, contains 80 billion transistors.

“We used to build things with either relays, mechanical switches or vacuum tubes, which are electrically controlled switches, but they’re big and finicky,” upper school computer science teacher Marina Peregrino said. “To get the computers to function, they’d have to go over and replace all the broken vacuum tubes, and same with mechanical relay. Something [physical] is actually switching, so that’s what wears out and breaks them down. A transistor is just a crystal. If you use them properly, they don’t wear out, because there’s nothing moving.”

So how exactly is this feat possible? Wafer fabrication squeezes billions of nanometer-wide transistors onto miniature computer chips.

First, manufacturers produce a silicon wafer: a thin disc of the semiconductor material which serves as the foundation for the integrated circuit. A rotating machine then coats the circular wafer with photoresist, a substance that softens upon exposure to ultraviolet light. Next, they shine light through masks containing circuit patterns onto the wafer, similar to how graffiti artists use stencils to paint specific shapes onto a surface. After a chemical wash removes the exposed photoresist, they fill the gaps of the imprinted patterns with a conductive metal, most commonly copper.

Moore’s Law, formulated by and named after Intel co-founder Gordon Moore, is an empirical prediction that the total number of transistors on an integrated circuit doubles approximately every two years, a trend that has continued since the 1970s. The law has developed into a self-fulfilling prophecy, as engineers base their advancements on these expectations, attempting to meet the benchmark each year.
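
The doubling rule can be written as a simple formula: a chip with N0 transistors in year t0 would have about N0 · 2^((t − t0) / 2) transistors in year t. The Python sketch below applies that formula. The starting point is an assumption not taken from this article, namely Intel's 4004 processor from 1971 with roughly 2,300 transistors, and the function name `projected_transistors` is purely illustrative.

```python
def projected_transistors(start_count, start_year, target_year, doubling_period=2.0):
    """Project a transistor count forward, assuming one doubling per `doubling_period` years."""
    doublings = (target_year - start_year) / doubling_period
    return start_count * 2 ** doublings


# Assumed starting point (not from the article): Intel 4004, 1971, about 2,300 transistors.
projection_2022 = projected_transistors(2_300, 1971, 2022)
print(f"Projected transistor count for 2022: {projection_2022:.2e}")
```

Under these assumptions, the projection for 2022 lands at roughly 100 billion transistors, the same order of magnitude as the 80 billion in Nvidia's H100. The match is only approximate, which fits the law's status as an empirical trend rather than a physical rule.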

Upper school robotics instructor Martin Baynes, who worked in the semiconductor industry for almost two decades and stood in the same room as Moore during his career at Fairchild Semiconductor, shared his perspective on the law.

“Gordon Moore was a visionary, and he could see, without knowing the details, that problems were solvable,” Baynes said. “Effectively, what Gordon Moore did was he said, ‘This is the hurdle we’re going to jump in two years, this is the hurdle we’re going to jump in four years.’ The industry can work at that kind of pace. He wasn’t telling the industry how to do it, but he could see that that could be done right.”

But growth inevitably slows down. Transistors can’t continue to shrink forever. The debate over when exactly Moore’s law will die has long existed within the scientific community. Many researchers worry that the law will become obsolete. Some claim it has already ended. Others, including Moore himself, predict that it will halt in the near future. Baynes remains optimistic that improvements will continue to happen.

“I think Moore’s law will continue to hold because it’s not a physical law,” Baynes said. “It’s a law of business behavior … For a number of decades, people have been saying you can’t pass this point, and we keep passing them. So I think it will hold. It may tail off a bit, but it’s going to hold on by and large.”

Besides powering everyday electronic devices, transistors also drive the AI revolution. AI tools like ChatGPT or OpenArt seem smooth and efficient, yet behind the scenes, these programs need a vast amount of data. Without billions of transistors, processing hundreds of gigabytes would take an impractical amount of time.

“A lot of the ideas and math behind AI was developed even before we really had access to so much computing power, but it wasn’t really feasible,” Peregrino said. “Now it’s feasible. For example, with wave functions, you could have a great wave function with mathematics, but [you] can’t solve it. Well, you could put all this data into the computer, and the computer can march through and estimate the solution to that. Science increases engineering, engineering increases science, and so it snowballs.”

Recent developments in transistor production show this self-perpetuating growth. Companies are experimenting with artificial intelligence to resolve design issues.

For instance, light diffraction poses a major problem when shining light through small and intricate mask patterns. Gaps in the “stencil” are so small, comparable to or smaller than the wavelength of the light itself, that they interfere with the light waves, spreading them out and essentially blurring the focus. Artificial intelligence may help compensate for these difficulties and improve transistor technology, which in turn leads to enhancements in AI.
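
Engineers often estimate the size of this blur with the Rayleigh criterion, which puts the smallest printable feature at about k1 · λ / NA, where λ is the wavelength of the light, NA is the numerical aperture of the lens system and k1 is a process-dependent factor. The Python sketch below is a rough illustration only. The wavelengths, apertures and the k1 value of 0.4 are assumed, typical-looking figures that do not come from this article, and `min_feature_nm` is a hypothetical helper name.

```python
def min_feature_nm(wavelength_nm, numerical_aperture, k1=0.4):
    """Rayleigh estimate of the smallest printable feature, in nanometers."""
    return k1 * wavelength_nm / numerical_aperture


# Assumed example values (not from the article):
# deep-ultraviolet light at 193 nm with NA 1.35, extreme-ultraviolet light at 13.5 nm with NA 0.33.
duv_limit = min_feature_nm(193.0, 1.35)
euv_limit = min_feature_nm(13.5, 0.33)
print(f"Deep UV estimate: {duv_limit:.1f} nm")
print(f"Extreme UV estimate: {euv_limit:.1f} nm")
```

With these assumed numbers, the estimate drops from roughly 57 nanometers to roughly 16 nanometers when the wavelength shrinks, which illustrates why shorter-wavelength light lets manufacturers print finer patterns.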

Whether development continues or slows, transistors remain the cornerstone of technology. They enable key functionalities in computers and phones and help programs perform calculations.

“I have a feeling transistors will stay important just because they’re so versatile,” AI Club Lecturer Aniketh Tummala (12) said. “You can make logic circuits out of transistors, memory too, so they’ll definitely play a heavy hand. I don’t see us phasing them out anytime soon.”
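
To illustrate the idea of building logic and memory out of transistors, the Python sketch below treats each transistor as an ideal on-off switch, which is a deliberate simplification. It builds a NAND gate from two such switches, derives other gates from NAND, and wires two NAND gates into a latch that remembers one bit. All function names are illustrative and do not come from the article.

```python
def nand(a: bool, b: bool) -> bool:
    """NAND gate: two idealized transistor switches in series pull the
    output low only when both inputs are on."""
    both_conducting = a and b
    return not both_conducting


def not_gate(a: bool) -> bool:
    return nand(a, a)


def and_gate(a: bool, b: bool) -> bool:
    return not_gate(nand(a, b))


def or_gate(a: bool, b: bool) -> bool:
    return nand(not_gate(a), not_gate(b))


def sr_latch(set_bit: bool, reset_bit: bool, q: bool) -> bool:
    """One bit of memory from two cross-coupled NAND gates.
    Returns the new stored value; set_bit and reset_bit must not both be True."""
    q_bar = not q
    for _ in range(4):  # re-evaluate the feedback loop until the outputs settle
        q = nand(not_gate(set_bit), q_bar)
        q_bar = nand(not_gate(reset_bit), q)
    return q


stored = False
stored = sr_latch(True, False, stored)   # set the bit
stored = sr_latch(False, False, stored)  # hold: the latch remembers
print("After set and hold:", stored)
stored = sr_latch(False, True, stored)   # reset the bit
print("After reset:", stored)
```

The latch keeps its value when neither input is active, which is the basic behavior that memory circuits rely on. Real chips use analog transistor behavior and far more elaborate designs, so this is a conceptual model only.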

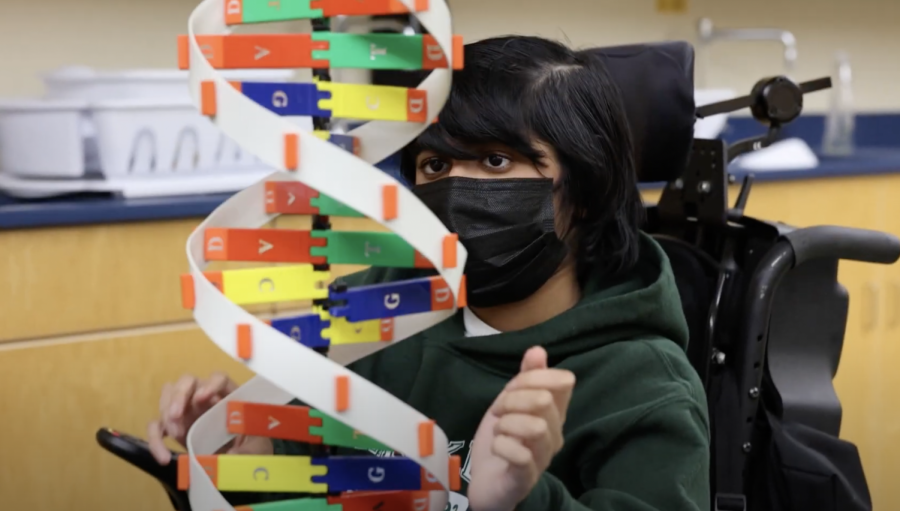

![“[Building nerf blasters] became this outlet of creativity for me that hasn't been matched by anything else. The process [of] making a build complete to your desire is such a painstakingly difficult process, but I've had to learn from [the skills needed from] soldering to proper painting. There's so many different options for everything, if you think about it, it exists. The best part is [that] if it doesn't exist, you can build it yourself," Ishaan Parate said.](https://harkeraquila.com/wp-content/uploads/2022/08/DSC_8149-900x604.jpg)

![“When I came into high school, I was ready to be a follower. But DECA was a game changer for me. It helped me overcome my fear of public speaking, and it's played such a major role in who I've become today. To be able to successfully lead a chapter of 150 students, an officer team and be one of the upperclassmen I once really admired is something I'm [really] proud of,” Anvitha Tummala ('21) said.](https://harkeraquila.com/wp-content/uploads/2021/07/Screen-Shot-2021-07-25-at-9.50.05-AM-900x594.png)

![“I think getting up in the morning and having a sense of purpose [is exciting]. I think without a certain amount of drive, life is kind of obsolete and mundane, and I think having that every single day is what makes each day unique and kind of makes life exciting,” Neymika Jain (12) said.](https://harkeraquila.com/wp-content/uploads/2017/06/Screen-Shot-2017-06-03-at-4.54.16-PM.png)

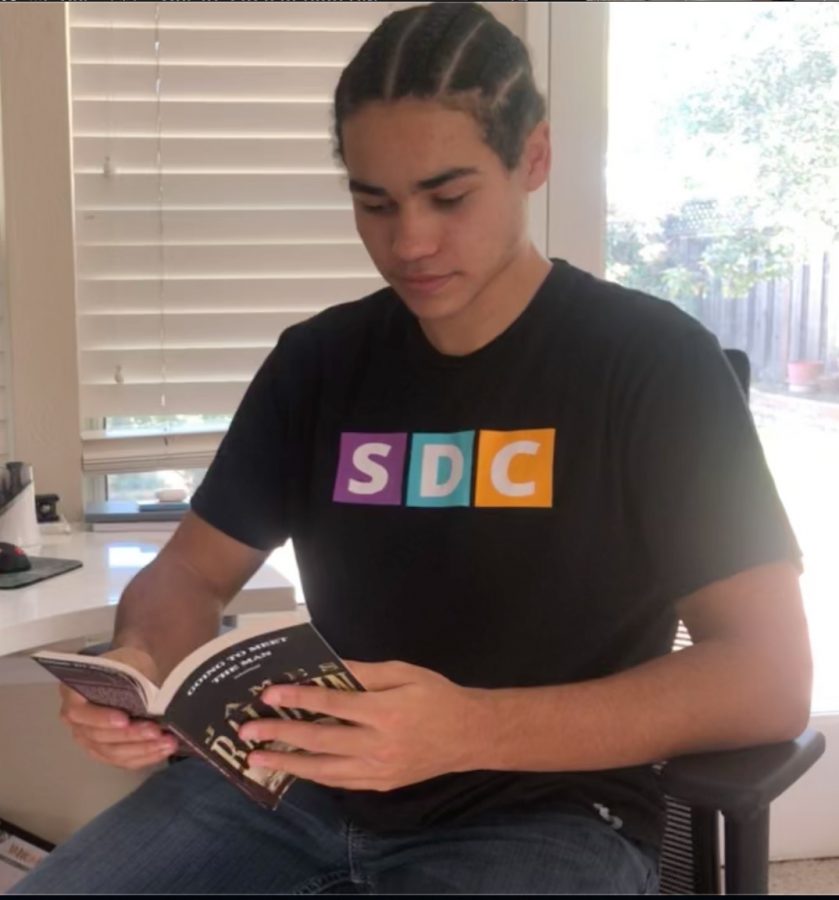

![“My slogan is ‘slow feet, don’t eat, and I’m hungry.’ You need to run fast to get where you are–you aren't going to get those championships if you aren't fast,” Angel Cervantes (12) said. “I want to do well in school on my tests and in track and win championships for my team. I live by that, [and] I can do that anywhere: in the classroom or on the field.”](https://harkeraquila.com/wp-content/uploads/2018/06/DSC5146-900x601.jpg)

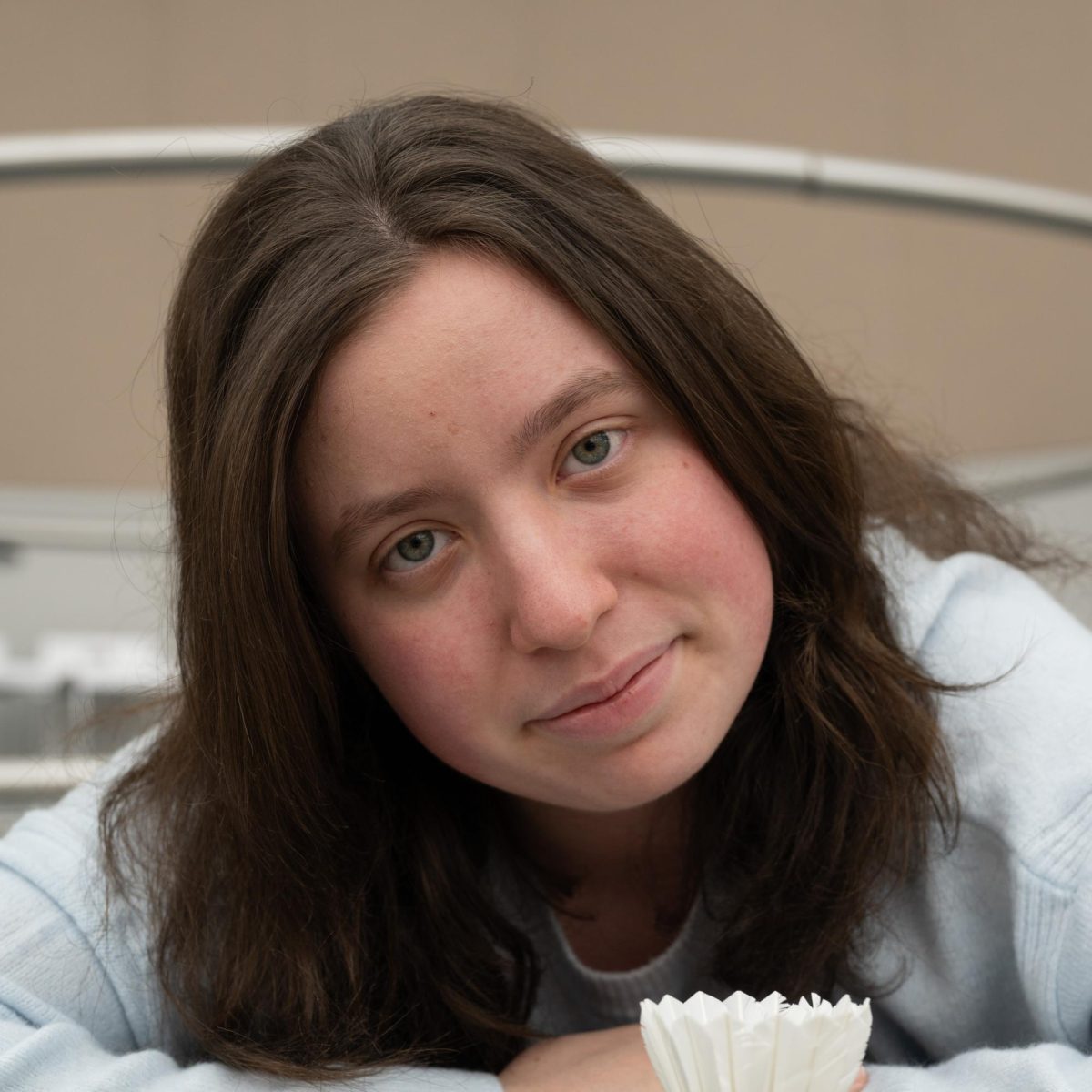

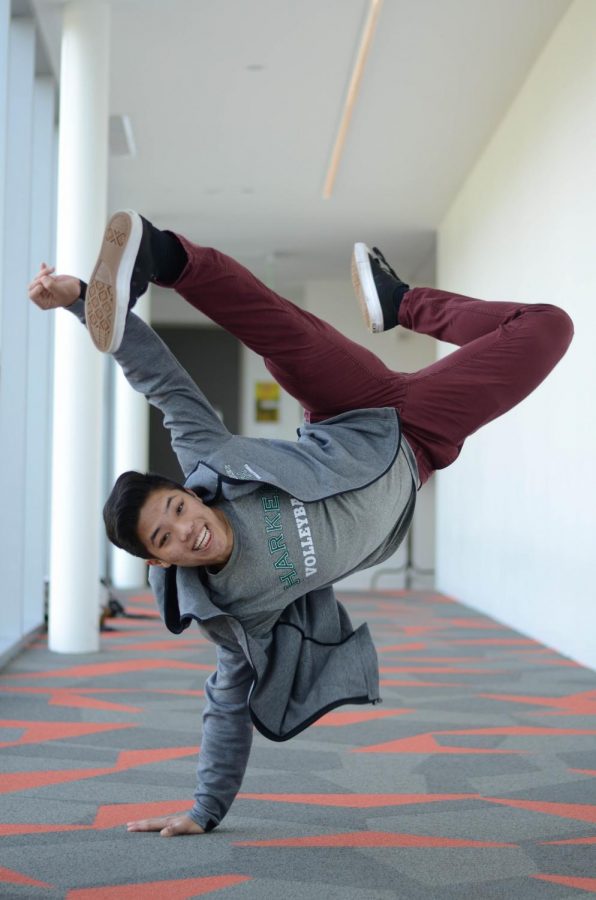

![“[Volleyball has] taught me how to fall correctly, and another thing it taught is that you don’t have to be the best at something to be good at it. If you just hit the ball in a smart way, then it still scores points and you’re good at it. You could be a background player and still make a much bigger impact on the team than you would think,” Anya Gert (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AnnaGert_JinTuan_HoHPhotoEdited-600x900.jpeg)

![“I'm not nearly there yet, but [my confidence has] definitely been getting better since I was pretty shy and timid coming into Harker my freshman year. I know that there's a lot of people that are really confident in what they do, and I really admire them. Everyone's so driven and that has really pushed me to kind of try to find my own place in high school and be more confident,” Alyssa Huang (’20) said.](https://harkeraquila.com/wp-content/uploads/2020/06/AlyssaHuang_EmilyChen_HoHPhoto-900x749.jpeg)